Contents

Fine-Tuning Vision-Language Models (VLMs)

Geo-spatial Embeddings

Importance of Fine-Tuning VLM in Data Curation

Fine-Tuning CLIP with RSICD

Significance of Fine-Tuning CLIP with RSICD

Encord Blog

Fine-Tuning VLM: Enhancing Geo-Spatial Embeddings

Written by

Akruti Acharya

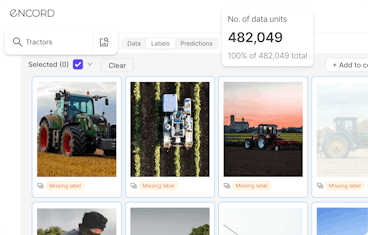

As the world generates an ever-expanding volume of visual content, the need for efficient data curation becomes increasingly important. Whether it’s satellite imagery, aerial photographs, or remote sensing data, organizing and annotating these visuals is essential for scientific research, urban planning, disaster response, and more.

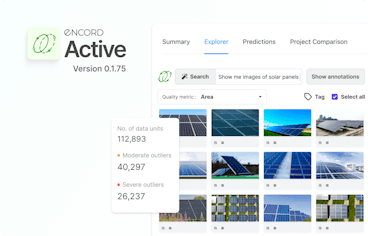

In this blog post, we explore how fine-tuning the Contrastive Language-Image Pre-Training (CLIP) model on RSICD, a dataset of remote sensing images and captions, improves how we curate geospatial data. Unlike traditional image processing methods, CLIP supports capabilities such as semantic search and multilingual annotations, which improve the processing and analysis of geospatial information.

Fine-Tuning Vision-Language Models (VLMs)

Fine-tuning Vision-Language Models (VLMs) to enhance embeddings is a cutting-edge approach to data curation. VLMs combine visual and textual understanding, which makes them well suited to processing and analyzing multimedia data.

By fine-tuning these models specifically for geospatial tasks, we aim to make the processing and analysis of location-based data more accurate and efficient.

Geo-spatial Embeddings

Geo-spatial embeddings are representations of geographical locations in a continuous vector space, where each location is encoded as a vector that carries semantic meaning. These embeddings are crucial for applications such as geographical information systems (GIS), location-based recommendation systems, urban planning, environmental monitoring, and disaster response.

However, generating accurate geospatial embeddings from heterogeneous data sources poses significant challenges due to the complexity and diversity of spatial information.

At Encord, we address these challenges by fine-tuning VLMs like CLIP to produce more accurate and semantically rich geospatial embeddings. This can help streamline your data curation process with new possibilities for using geospatial data.

Importance of Fine-Tuning VLM in Data Curation

The importance of fine-tuning VLMs in data curation can be understood through several key aspects:

Semantic Understanding

VLMs are capable of understanding and interpreting both visual and textual information simultaneously. By fine-tuning these models on specific datasets relevant to a particular domain, such as medical imaging or satellite imagery, they can learn to associate visual features with corresponding textual descriptions.

This semantic understanding enriches the curated data by adding context and meaning to the information being processed. As a result, annotators can quickly identify and tag images based on textual descriptions, which improves dataset organization and curation.

Adaptability to Domain-Specific Requirements

Different domains have unique data characteristics and requirements. Fine-tuning VLMs allows for customization and adaptation to these domain-specific needs. For example, here we fine-tune a VLM to improve geospatial embeddings.

Improved Data Accuracy

Fine-tuning VLMs enables them to better capture the complexities of curated data. This results in improved relevance and accuracy of the curated datasets as the models learn to extract and highlight the most relevant features and information. Consequently, curated datasets become more valuable for downstream tasks such as machine learning, analytics, and decision-making processes.

Fine-Tuning CLIP with RSICD

CLIP

Contrastive Language-Image Pre-training (CLIP), developed by OpenAI, is a multimodal model that bridges the gap between natural language and visual content. It learns to associate images with their corresponding captions by training contrastively on image-caption pairs rather than on manually assigned class labels, which enables tasks like image search, zero-shot classification, and more.
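
To make the idea of associating images with captions more concrete, here is a minimal sketch of a CLIP-style symmetric contrastive loss in plain Python. It is an illustration, not the training code used for this post: the toy 2-D embeddings are made up, the function names are hypothetical, and the temperature of 0.07 is just an illustrative default. The loss is low when each image is most similar to its own caption and high when the pairs are mismatched.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cross_entropy(logits, target_index):
    """Cross-entropy of one row of logits against the index of the true match."""
    peak = max(logits)  # subtract the max for numerical stability
    log_sum_exp = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return log_sum_exp - logits[target_index]


def clip_contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss for a batch where pair i is (image i, caption i)."""
    images = [normalize(v) for v in image_embs]
    texts = [normalize(v) for v in text_embs]
    n = len(images)
    # logits[i][j] = scaled cosine similarity between image i and caption j
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
        for img in images
    ]
    # Image-to-text direction: each row should peak at its own caption.
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text-to-image direction: each column should peak at its own image.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    text_to_image = sum(cross_entropy(columns[j], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2


# Toy embeddings (made-up values): matched pairs point in similar directions.
image_embs = [[1.0, 0.1], [0.1, 1.0]]
aligned_text = [[0.9, 0.2], [0.2, 0.9]]
swapped_text = [[0.2, 0.9], [0.9, 0.2]]

aligned_loss = clip_contrastive_loss(image_embs, aligned_text)
swapped_loss = clip_contrastive_loss(image_embs, swapped_text)
print(aligned_loss, swapped_loss)
```

Fine-tuning on a dataset such as RSICD would minimize a loss of this shape over batches of satellite images and their captions, pulling matching pairs together in the shared embedding space.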

RSICD Dataset

The Remote Sensing Image Caption Dataset (RSICD) serves as our training ground. Comprising approximately 10,000 satellite images, this dataset features image labels and descriptive captions. These captions provide valuable context, making RSICD an ideal candidate for fine-tuning CLIP.

Why Fine-Tune CLIP with RSICD?

Geo-Spatial Specificity

Satellite images differ significantly from everyday, ground-level photos in scale, perspective, and resolution. By fine-tuning CLIP with RSICD, we tailor the model to the complexities of geospatial data. This specificity enhances its ability to handle satellite imagery effectively.

Strengthen Search Ability

By incorporating captions during fine-tuning, we ensure that the model cohesively embeds both image and text information. Consequently, CLIP becomes adept at natural language search and image retrieval.
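
Because images and text share one embedding space, natural language search reduces to ranking image embeddings by their similarity to the query embedding. The sketch below shows that ranking step only. The file names and vectors are hypothetical placeholders; in practice the embeddings would come from the fine-tuned image and text encoders.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_embedding, image_index, top_k=2):
    """Return the top_k image ids ranked by similarity to the query embedding."""
    scored = [
        (cosine_similarity(query_embedding, embedding), image_id)
        for image_id, embedding in image_index.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [image_id for _, image_id in scored[:top_k]]


# Hypothetical image embeddings (placeholders for image-encoder outputs).
image_index = {
    "harbor_001.png": [0.9, 0.1, 0.0],
    "forest_014.png": [0.0, 0.2, 0.9],
    "beach_007.png": [0.8, 0.3, 0.1],
}
# Hypothetical embedding of a text query such as "boats near the coast".
query_embedding = [1.0, 0.2, 0.0]

results = search(query_embedding, image_index)
print(results)  # ['harbor_001.png', 'beach_007.png']
```

For datasets with many thousands of images, the same ranking is usually delegated to a vector index rather than a linear scan.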

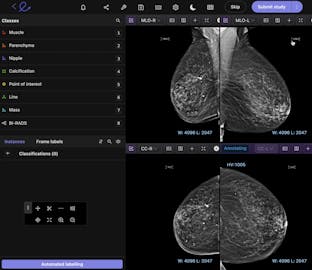

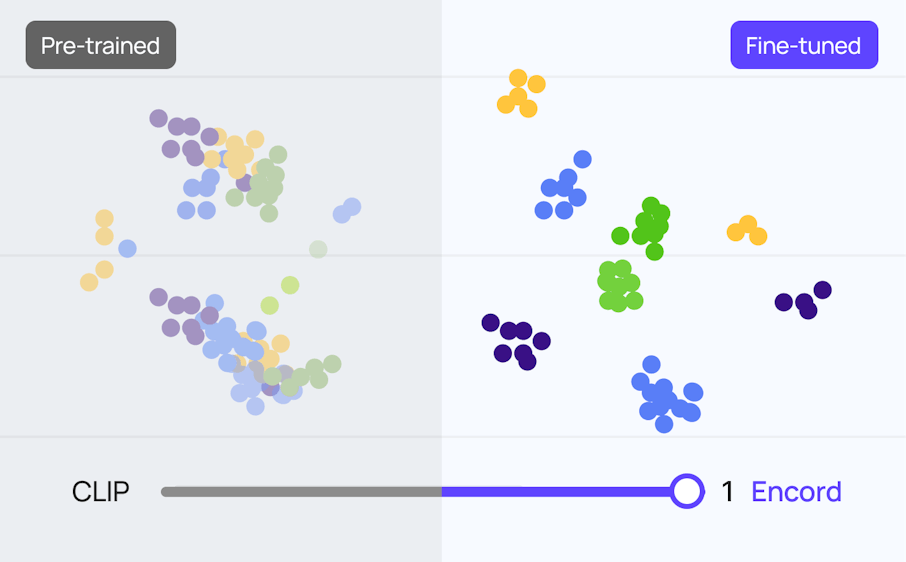

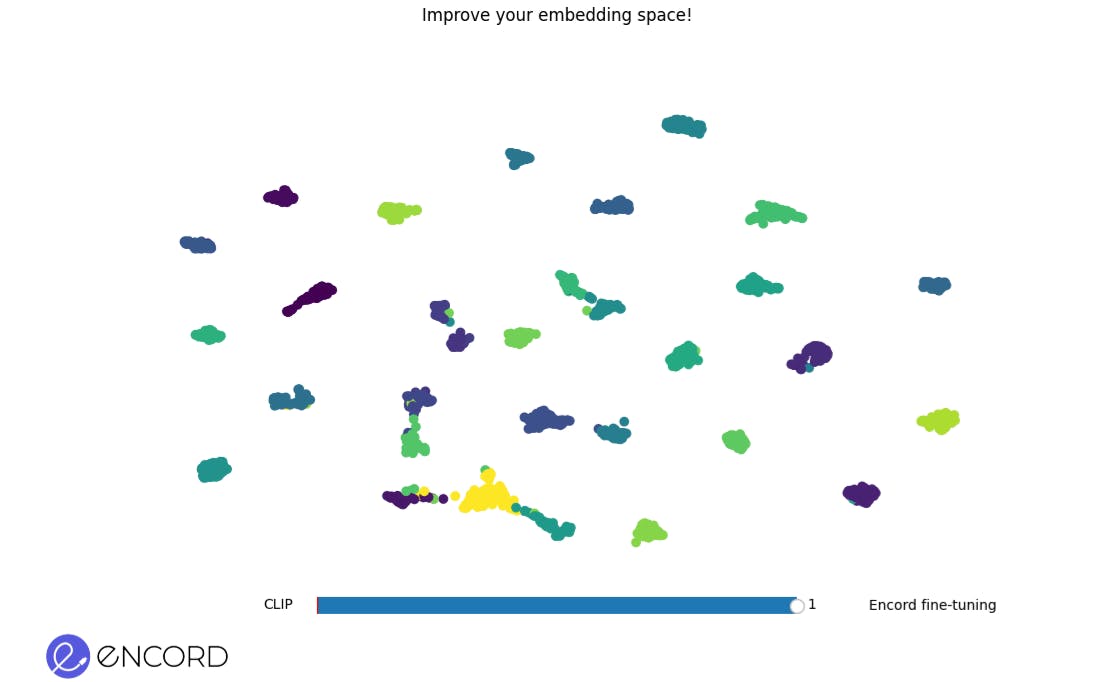

Embedding Space Before Fine-Tuning. The scattered arrangement of clusters represents data points in the initial embedding space.

Embedding Space After Fine-Tuning. A more refined and cohesive grouping of data points indicates an improved embedding space post-fine-tuning.

Zero-Shot Performance Evaluation

We evaluate the model’s zero-shot performance using ground truth labels. This involves assessing whether the textual embeddings align with the image embeddings. Such alignment validates the consistency of CLIP’s image-text capabilities.
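
One common way to run such an evaluation is to embed each class name as text, assign every image to the class whose text embedding is most similar, and compare the prediction with the ground truth label. The sketch below assumes that setup; the class names, embeddings, and function names are illustrative and are not taken from the actual experiment.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_predict(image_embedding, class_text_embeddings):
    """Pick the class whose text embedding is closest to the image embedding."""
    return max(
        class_text_embeddings,
        key=lambda label: cosine_similarity(
            image_embedding, class_text_embeddings[label]
        ),
    )


def zero_shot_accuracy(samples, class_text_embeddings):
    """Fraction of images whose predicted class matches the ground truth label."""
    correct = sum(
        zero_shot_predict(embedding, class_text_embeddings) == label
        for embedding, label in samples
    )
    return correct / len(samples)


# Hypothetical text embeddings, one per class prompt.
class_text_embeddings = {
    "airport": [1.0, 0.0],
    "farmland": [0.0, 1.0],
}
# Hypothetical (image embedding, ground truth label) pairs.
samples = [
    ([0.9, 0.2], "airport"),
    ([0.1, 0.8], "farmland"),
    ([0.6, 0.7], "airport"),  # sits closer to "farmland", so it counts as an error
    ([0.3, 0.9], "farmland"),
]

accuracy = zero_shot_accuracy(samples, class_text_embeddings)
print(accuracy)  # 0.75
```

Running the same evaluation before and after fine-tuning gives a direct measure of how much the image-text alignment improved.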

🔥 NEW RELEASE: We released TTI-Eval (text-to-image evaluation), an open-source library for evaluating zero-shot classification models like CLIP and domain-specific ones like BioCLIP against your (or HF) datasets to estimate how well the model will perform. Get started with it on GitHub, and do ⭐️ the repo if it's awesome. 🔥

Significance of Fine-Tuning CLIP with RSICD

Geo-Spatial Annotation Precision

- Contextual Understanding: RSICD provides satellite images alongside descriptive captions. By fine-tuning CLIP, we enhance its ability to understand the nuances of geospatial features—mountains, rivers, forests, urban areas, and more.

- Accurate Labeling: Curators can annotate images with greater precision. Whether identifying specific land cover types or pinpointing landmarks, CLIP ensures context-aware annotations.

Efficient Data Exploration

- Semantic Search: Curators and researchers can query the dataset using natural language. CLIP retrieves relevant images based on textual descriptions. For instance, searching for “coastal erosion” yields coastal satellite imagery.

- Time Savings: Manual exploration of thousands of images becomes streamlined. CLIP acts as a smart filter, presenting relevant visuals promptly.

Consistent Labeling and Quality Control

- Alignment of Embeddings: During fine-tuning, CLIP learns to align image embeddings with textual embeddings. Curators can cross-check whether the textual descriptions match the visual content.

- Uniform Annotations: Consistent labeling improves model training and downstream tasks. Whether detecting deforestation or urban sprawl, CLIP ensures uniformity.
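
The cross-check described above can be sketched as a simple filter: compute the similarity between each image embedding and its caption embedding, then flag pairs that fall below a cutoff for manual review. The pair ids, vectors, and the 0.5 threshold are all assumptions chosen for illustration; a real threshold would need to be tuned on your own data.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def flag_mismatches(pairs, threshold=0.5):
    """Return ids of image-caption pairs whose similarity is below the threshold."""
    return [
        pair_id
        for pair_id, (image_embedding, caption_embedding) in pairs.items()
        if cosine_similarity(image_embedding, caption_embedding) < threshold
    ]


# Hypothetical (image embedding, caption embedding) pairs keyed by sample id.
pairs = {
    "river_003": ([0.9, 0.1], [0.8, 0.2]),
    "stadium_011": ([0.1, 0.9], [0.9, 0.1]),  # caption points the "wrong way"
}

flagged = flag_mismatches(pairs)
print(flagged)  # ['stadium_011']
```

Flagged pairs are candidates for review rather than confirmed errors, since a low score can also mean the model has not yet learned that concept.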

In summary, fine-tuning CLIP with RSICD empowers data curators by providing efficient search, consistent labeling, multilingual support, and domain-specific expertise. As we embrace this powerful tool, we pave the way for smarter, more accessible datasets.

View more postsRelated blogs

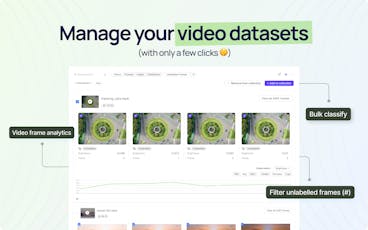

Announcing the launch of Advanced Video Curation

At Encord we continually look for ways to enable our customers to bring their models to market faster. Today, we’re announcing the launch of Video Data Management within the Encord Platform, providing an entirely new way to interact with video data. Gone are the days of searching frame by frame for the relevant clip. Now filter and search across your entire dataset of videos with just a few clicks. What is Advanced Video Curation? In our new video explorer page users can search, filter, and sort entire datasets of videos. Video-level metrics, calculated by taking an average from the frames of a video, allow the user to curate videos based on a range of characteristics, including average brightness, average sharpness, the number of labeled frames, and many more. Users can also curate within individual videos with the new video frame analytics timelines, enabling a temporal view over the entire video. We're thrilled that Video Data Curation in the Encord platform is the first and only platform available to search, query, and curate relevant video clips as part of your data workflows. Support within Encord This is now available for all Encord Active customers. Please see our documentation for more information on activating this tool. For any questions on how to get access to video curation please contact sales@encord.com.

Apr 24 2024

2 M

Setting Up a Computer Vision Testing Platform

When machine learning (ML) models, especially computer vision (CV) models, move from prototyping to real-world application, they face challenges that can hinder their performance and reliability. Gartner's research reveals a telling statistic: just over half of AI projects make it past the prototype stage into production. This underlines a critical bottleneck—the need for rigorous testing. Why do so many models fail to make it to real-world applications? At Encord, ML teams tell us that model performance bottlenecks include: the complexity of ML models and diverse datasets, the need for testing processes that can handle large amounts of data, the need for automation to handle repetitive tasks, and the need for teams to collaborate to improve ML systems. This article will teach you the intricacies of setting up a computer vision (CV) testing platform. You will gain insights into the essence of thorough test coverage—vital for the unpredictable nature of CV projects—and learn about managing test cases effectively. You will also learn how collaborative features can be the centerpiece of successful testing and validation. By the end of the article, you should understand what it takes to set up a CV testing platform. Challenges Faced by Computer Vision Models in Production Computer Vision (CV) models in dynamic production environments frequently encounter data that deviates significantly from their training sets—be it through noise, missing values, outliers, seasonal changes, or general unpredictable patterns. These deviations can introduce challenges that compromise model performance and reliability. Building reliable, production-ready models comes with its own set of challenges. In this section, you will learn why ensuring the reliability of CV models is a complex task. We are going to look at the following factors: Model Complexity: The intricate architecture of CV models can be challenging to tune and optimize for diverse real-world scenarios. 
Hidden Stratification: Variations within classes the model hasn't explicitly trained on can lead to inaccurate predictions. Overfitting: A model might perform exceptionally well on the training data but fail to generalize to new, unseen data. Model Drift: Changes in real-world data over time can gradually decrease a model's accuracy and applicability. Adversarial Attacks: Deliberate attempts to fool models using input data crafted to cause incorrect outputs. Understanding these challenges is the first step toward building robust, production-ready CV models. Next, we will explore strategies to mitigate these challenges, ensuring your models can withstand the rigors of real-world application. 🚀 Model Complexity As CV models, particularly visual foundation models (VFMs), visual language models (VLMs), and multimodal AI models, grow in complexity, they often become 'black boxes.' This term refers to the difficulty in understanding how these models make decisions despite their high accuracy. Because these models have complicated, multi-layered architectures with millions of parameters, it is hard to figure out the reasoning behind their outputs. Confidence in the model's performance can be challenging, mainly when it produces unexpected predictions. Consider a security surveillance system with advanced visual recognition to spot suspicious activity. This system, powered by a complex visual language model (VLM), is trained on lots of video data encompassing various scenarios from numerous locations and times. The system can accurately identify threats like unattended bags in public spaces and unusual behavior, but its decision-making process is unclear. Security personnel may struggle to understand why the system flags a person or object as suspicious. The model may highlight factors like an object's size, shape, or movement patterns, but it is unclear how these factors are synthesized to determine a threat. 
This opacity raises concerns about the model's trustworthiness and the potential for false positives or negatives. The lack of interpretability in such CV models is not just an academic issue but has significant real-world consequences. It affects the confidence of those relying on the system for public safety, potentially leading to mistrust or misinterpretation of the alerts generated. Want to dig deeper into these models? Watch our webinar, ‘Vision Language Models: Powering the Next Chapter in AI.’ Hidden Stratification Precision, accuracy, recall, and mean Average Precision (mAP) are commonly used metrics when evaluating the performance of CV models. However, it's important to remember that these metrics may not provide a complete picture of the model's performance. A model could be very accurate when trained on a specific dataset, but if that dataset doesn't represent the real-world scenario, the model may perform poorly. This dilemma is called hidden stratification. Hidden stratification occurs when the training data doesn't have enough representative examples of certain groups or subgroups. For instance, a model trained on a dataset of images of primarily Caucasian patients may struggle to accurately diagnose skin cancer in black patients. This could raise serious inclusivity concerns, especially in mission-critical applications. See Also: The ultimate guide to data curation in computer vision. Overfitting A model could learn so well from the training data that it cannot make correct predictions on new data, which could lead to wrong predictions on real-world data in production systems. You have probably encountered this before: You train a model to classify images of cats and dogs with a 1000-image dataset split evenly between the two classes and trained for 100 epochs. The model achieves a high accuracy of 99% on the training data but only manages 70% accuracy on a separate test dataset. 
The discrepancy suggests overfitting, as the model has memorized specific details from the training images, like ear shape or fur texture, rather than learning general features that apply to all cats and dogs. Model Drift You consider a model “drifting” when its predictive accuracy reduces over time when deployed to production. If you do not build your ML system so that the model can adapt to real-world data changes, it might experience sudden drifts or slow decay over time, depending on how your business patterns change. One practical example is to consider an autonomous vehicle's pedestrian detection system. Initially trained on extensive datasets covering various scenarios, such a system might still experience model drift due to unforeseen conditions, like new types of urban development or changes in pedestrian behavior over time. For instance, introducing electric scooters and their widespread use on sidewalks presents new challenges not in the original training data, potentially reducing the system's accuracy in identifying pedestrians. Recommended Read: Best Practices to Improve ML Model Performance and Mitigate Model Drfit. Adversarial Attacks Adversarial attacks consist of deliberately crafted inputs that fool models into making incorrect predictions. These attacks threaten ML applications, from large language models (LLMs) to CV systems. While prompt injection is a known method affecting text-based models, CV models face similar vulnerabilities through manipulated images (image perturbation) or objects within their field of view. A notable demonstration of this was by researchers at the University of California, Berkeley, in 2016. They executed an adversarial attack against a self-driving car system using a simple sticker, misleading the car's vision system into misidentifying the type of vehicle ahead. 
This manipulation caused the self-driving car to stop unnecessarily, revealing how seemingly innocuous input data changes can impact decision-making in CV applications. Adversarial attacks are challenging because of their subtlety and precision. Only minor alterations are often needed to deceive an AI system, making detection and prevention particularly challenging. This underscores the critical importance of rigorously testing ML models to identify and mitigate such vulnerabilities. You can make CV systems more resistant to these attacks by testing them thoroughly and using adversarial simulation as part of your process for reliable applications. Testing Computer Vision Models and Applications Testing CV applications is more complex than testing traditional software applications. This is because the tests only partially depend on the software. Instead, they rely on factors such as the underlying business problem, dataset characteristics, and the models you trained or fine-tuned. Therefore, establishing a standard for testing CV applications can be complex. Understanding the Computer Vision Testing Platform A CV test platform forms the backbone of a reliable testing strategy. It comprises an ecosystem of tools and processes that facilitate rigorous and efficient model evaluation. The platform can help teams automate the testing process, monitor test results over time, and rectify issues with their models. Essential components of a robust CV testing platform include: Test Data Management: Involves managing the test data (including versioning and tracing lineage) to mirror real-world scenarios critical for models to understand such conditions. With this component, you can manage the groups and sub-groups (collections) to test your model against before to ensure production readiness. Test Reporting: An effective reporting system (dashboards, explorers, visualizations, etc.) 
is instrumental in communicating test outcomes to stakeholders, providing transparency, and helping to track performance over time. Model Monitoring: The platform should also include a component that monitors the model's performance in production, compares it against training performance, and identifies any problems. The monitoring component can track data quality, model metrics, and detect model vulnerabilities to improve the model’s robustness against adversarial attacks. Test Automation: Setting up automated testing as part of a continuous integration, delivery, and testing (CI/CD/CT) pipeline allows you to configure how you validate the model behavior. This ensures that models work as expected by using consistent and repeatable tests. Recommended Read: New to model monitoring? Check out our guide to ML model observability. Setting Up Your Computer Vision Testing Platform Having established what the CV testing platform is and its importance, this section will describe what a good platform setup should look like. 1. Define Test Cases In ML, test cases are a set of conditions used to evaluate an ML model's performance in varying scenarios and ensure it functions as expected. Defining robust model test cases is crucial for assessing model performance and identifying areas to improve the model’s predictive abilities. For instance, you trained a model on diverse driving video datasets and parking lot videos. You then used it on a dashcam system to count the number of vehicles while driving and in a parking lot. The successfully trained model performs admirably in Boston with cameras installed on various dashcams and across parking lots. An example of the Berkley Diverse Driving Dataset in Encord Active. Stakeholders are satisfied with the proof-of-concept and are asking to scale the model to include additional cities. Upon deploying the model in a new area in Boston and another town, maybe Minnesota, new scenarios emerge that you did not consider. 
In one parking garage in Boston, camera images are slightly blurred, contrast levels differ, and vehicles are closer to the cameras. In Minnesota, snow is on the ground, the curbside is different, various lines are painted on the parking lot, and new out-of-distribution car models (not in the training data) are present. Production scenario for the CV model in a Minnesota snowy parking lot (left) and Boston parking house in a dashcam (right). These scenarios are strange to the model and will harm its performance. That is why you should consider them test cases when testing or validating the model's generalizability. Defining the test cases should begin with preparing a test case design. A test case design is the process of planning and creating test cases to verify that a model meets its requirements and expected behavior. It involves identifying what aspects of the ML model need to be tested and how to test them. Recommended Read: Model Test Cases: A Practical Approach to Evaluating ML Models. Steps in test case design Define test objectives: Clearly state what the tests are expected to achieve. This starts with identifying failure scenarios, which may include a wide range of factors, such as changing lighting conditions, vehicle types, unique perspectives, or environmental variations, that could impact the model's performance. For example, in a car parking management system, some of the potential edge cases and outliers could include snow on the parking lot, different types of lines painted on the parking lot, new kinds of cars that weren't in the training data, other lighting conditions at varying times of day, different camera angles, perspectives, or distances to cars, and different weather conditions, such as rain or fog. By identifying scenarios where the model might fail, you can develop test cases that evaluate the model's ability to handle these scenarios effectively. After defining the test objectives, the next step is selecting test data for each case. 
See Also: How to Analyze Failure Modes of Object Detection Models for Debugging. Select test data and specify test inputs: When selecting input data, consider a diverse range of scenarios and conditions. This ensures that the data is representative of the defined test cases, providing a comprehensive understanding of the system or process being analyzed. Be sure to include edge cases in your selection, as they can reveal potential issues or limitations that may not be apparent with only typical data. In the car parking management system above, obtain samples of video images from different locations and parking lot types. Determine expected ML model outcomes and behaviors: Specify each test case's expected results or behaviors. This includes defining what the model should predict or what the software should do in response to specific inputs. Based on the failure mode scenarios of the model in the car parking management system, here are some recommendations: The model should achieve a mean Average Precision (mAP) of at least 0.75 for car detection when cars are partially covered or surrounded by snow and in poorly lit parking garages. The model's accuracy should be at least 98% for partially snow-covered parking lines. Create test cases: Document each test case with inputs, actions, and expected outcomes for clear and effective evaluation. Execute test cases: Execute the prepared test cases systematically to evaluate the ML model. Where possible, utilize automated testing to ensure efficiency and consistency. Record the actual outcomes to facilitate a detailed comparison with the expected results. Analyzing results: Review the outcomes using established metrics such as precision, recall, and f1-score. Document any deviations and conduct a thorough analysis to uncover the root cause of each discrepancy. Common issues may include model overfitting, data bias, or inadequate training. Useful Read: 5 Ways to Reduce Bias in Computer Vision Datasets. 
Iterative improvement: Upon identifying any issues, take corrective actions such as adjusting the model's hyperparameters, enriching the dataset with more samples and subsets, or refining the features. After modifications, re-run the test cases to verify improvements. This iterative process is essential for achieving the desired model performance and reliability. Keep iterating through this process until the model's performance aligns with the objectives defined in your test cases. 2. Compute Environment Most CV tests involving complex models and large datasets are computationally intensive. Adequate computing resources are essential for efficient and effective testing. Without these resources, you may encounter scalability issues, an inability to manage large visual test datasets, longer testing times, crashing sessions, insufficient test coverage, and a higher risk of errors. Strategies for ensuring adequate compute resources for CV testing: Resource estimation: Begin assessing the computational load by considering the model's size and complexity, dataset volume, and the number of tests. This will help in estimating the required resources to ensure tests run smoothly. Using cloud computing: Use services from cloud providers such as AWS, Azure, or GCP. These platforms provide scalable resources to accommodate varying workloads and requirements. Tools like Encord Active—a comprehensive CV testing and evaluation platform—streamline the process by connecting to cloud storage services (e.g., AWS S3, Google Cloud Storage, Azure Blob Storage) to retrieve test data. Distributed computing: Use distributed computing frameworks like Apache Spark to distribute CV tests across multiple machines. This can help reduce the time it takes to execute the tests. Optimization of tests: Optimize your CV tests by choosing efficient algorithms and data structures to minimize the computational resources required. 
ML teams can ensure their models are fully tested and ready for production by carefully planning how to use modern cloud-based solutions and distributed computing. 3. Running Tests and Analyzing Results For a smooth CV testing process, follow these comprehensive steps: Data and code preparation: Transfer the test data and code to the computing environment using secure file transfer methods or uploading directly to a cloud storage service. Install dependencies: Install the CV testing framework or tool you have chosen to work with and any additional libraries or tools required for your specific testing scenario. Configure the test environment: Set the necessary environment variables and configuration parameters. For example, define database connection strings, store secrets, or specify the path to the dataset and model artifacts. Execute tests: Run the tests manually or through an automation framework. Encord Active, for instance, can facilitate test automation by computing quality metrics for models based on the predictions and test data. Collect and analyze results: Gather the test outputs and logs, then analyze them to evaluate the model's performance. This includes mAP, Mean Square Error (MSE), and other metrics relevant to the use case and model performance. 4. Automating ML Testing with Continuous Integration, Delivery, and Testing (CI/CD/CT) Continuous integration, delivery (or deployment), and testing for CV automates the process of building, testing, and deploying the models. This automation is crucial in ensuring that models are reliable and issues are identified and resolved early on. Steps for a robust CI/CD/CT pipeline in ML: Pipeline trigger: Automate the pipeline to trigger upon events like code commits or set it for manual initiation when necessary. Code repository cloning: The pipeline should clone the latest version of the codebase into the test environment, ensuring that tests run on the most current iteration. 
Dependency installation: The pipeline must automatically install dependencies specific to the model, such as data processing libraries and frameworks. Model training and validation: In addition to training, the pipeline should validate the ML model using a separate dataset to prevent overfitting and ensure that the model generalizes well. Model testing: Implement automated tests to evaluate the model's performance on out-of-distribution, unseen data, focusing on the model metrics. Model deployment: The pipeline could automatically ship the model upon successful testing. Depending on the pipeline configuration, this may involve a soft deployment to a staging environment or a full deployment to production. Platforms like GitHub Actions, CircleCI, Jenkins, and Kubeflow offer features that cater to the iterative nature of ML workflows, such as experiment tracking, model versioning, and advanced deployment strategies. Advantages of CI/CD/CT for computer vision Enhanced model quality: Rigorous testing within CI/CT pipelines contributes to high-quality, reliable models in production environments. Reduced error risk: Automation minimizes human error, especially during repetitive tasks like testing and deployment. Efficiency in development: Automating the build-test-deploy cycle accelerates development and enables rapid iteration. Cost-effectiveness: The practices reduce resource waste, translating to lower development costs. Best practices By incorporating best practices and being mindful of common pitfalls, you can make your pipeline robust and effective. These practices include: Ensure your pipeline includes: Data and model versioning to track changes over time. Comprehensive test suites that mirror real-world data and scenarios. Regular updates to the test suite reflect new insights and data. Pitfalls to avoid: Avoid underestimating the complexity of models within the CI pipeline. Prevent data leakage between training and validation datasets. 
Ensure that the CI pipeline is equipped to handle large datasets efficiently. Throughout this article, you have explored the entire workflow for setting up a testing platform. You might have to configure and maintain several different components. Setting these up might require cross-functional and collaborative development and management efforts. So, most teams we have worked with often prefer using a platform incorporating all these features into one-click or one-deploy configurations. No spinning up servers, using tools that are not interoperable, or maintaining various components. Enter CV testing platforms! Using Platforms for Testing Computer Vision Models Over Building One Various platforms offer tools for testing ML models. Some examples are Encord Active, Kolena, Robust Intelligence, and Etiq.ai. Encord Active, for instance, excels at debugging CV models using data-centric quality metrics to uncover hidden model behaviors. It provides a suite of features for organizing test data, creating Collections to analyze model performance on specific data segments, and equipping teams to devise comprehensive tests. With Active Cloud, you can manage test cases and automatically compute metrics for your models through a web-based platform or the Python client SDK (to import model predictions). Conclusion: Using A Robust Testing Platform Throughout this article, you have learned that a robust testing platform is vital to developing reliable and highly-performant computer vision models. A well-set-up testing platform ensures comprehensive test coverage, which is crucial for verifying model behavior under diverse and challenging conditions. Managing your test cases and seamless team collaboration is also essential for addressing issues like hidden stratification—where models perform well on average but poorly on subgroups or slices—overfitting, and model drift over time. 
Remember to document the process and results of your accountability tests to inform future testing cycles. Regularly reviewing and refining your test strategy is key to maintaining an effective model development lifecycle. With the continuous advancements in traditional and foundation ML models over the next few years, we expect the integration of robust testing platforms to become increasingly critical. They will be pivotal in driving the success of LLM and ML applications, ensuring they deliver ongoing value in real-world scenarios. Your ML team's goal should be clear: to enable the development of CV models that are not only high-performing but also resilient and adaptable to the ever-changing data landscape they encounter.
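The hidden stratification problem mentioned above is easy to miss if you only track one aggregate number. As a minimal sketch (the function name `accuracy_by_slice`, the toy labels, and the "day"/"night" slices are all illustrative assumptions, not part of any platform's API), a test can report the metric per slice alongside the overall value:

```python
from collections import defaultdict


def accuracy_by_slice(labels, predictions, slices):
    """Return (overall accuracy, {slice name: accuracy}) for paired samples."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for y_true, y_pred, slice_name in zip(labels, predictions, slices):
        totals[slice_name] += 1
        correct[slice_name] += int(y_true == y_pred)
    overall = sum(correct.values()) / sum(totals.values())
    per_slice = {name: correct[name] / totals[name] for name in totals}
    return overall, per_slice


# Hypothetical toy data: the model is right on every "day" image
# and wrong on every "night" image.
labels = [1, 1, 0, 0, 1, 0, 1, 0, 1, 1]
predictions = [1, 1, 0, 0, 1, 0, 1, 0, 0, 0]
slices = ["day"] * 8 + ["night"] * 2

overall, per_slice = accuracy_by_slice(labels, predictions, slices)
print(overall, per_slice)
```

Here the overall accuracy looks acceptable while the "night" slice fails completely, which is the kind of gap a per-slice test case is meant to surface.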

Apr 09 2024

8 M

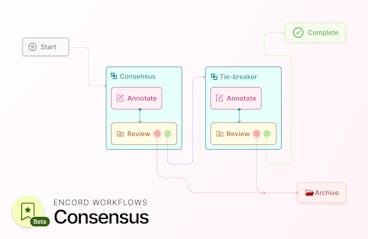

Announcing the launch of Consensus in Encord Workflows

At Encord, we continually obsess over how to support ML teams managing their labeling workflows and make it as easy as possible for teams to improve model performance. Today, we’re announcing the launch of Consensus workflows within Encord. What is Consensus? Consensus allows multiple annotators to conduct a labeling task of the same file in a mutually blind fashion — that is, each annotator is unaware that other annotators are working on the task. All submissions are aggregated into the following evaluation substage where designated Consensus reviewers can evaluate the agreement between labels and select a representative set. Integrating Consensus into your labeling workflows allows you to create higher-quality annotations by assessing the submissions of multiple annotators and simplifying compliance with domain-specific regulatory requirements. Support within Encord Support will begin with image and video modalities, with full modality support progressively released soon after. You can read more in our documentation for more information on activating this feature and building consensus workflows.

Apr 02 2024

2 M

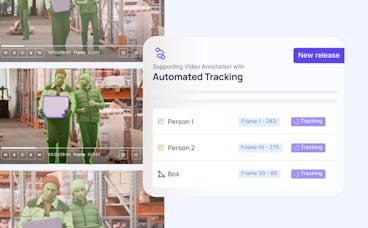

Announcing Auto-Segmentation Tracking For Video

In computer vision, where accurate training data is the lifeblood of successful models, video annotation plays an important role. However, annotating each frame individually is time-consuming and prone to inconsistencies. Nearby frames often exhibit visual similarities, and annotations made on one frame can be extrapolated to others. Enter automated polygon and bitmask tracking! Automated segmentation tracking significantly reduces annotation time, while simultaneously improving accuracy - gone are the days of tediously labeling every frame in a video. Polygon and Bitmask tracking provides the tooling required to build labeled training data at scale and at speed. Polygon tracking meticulously outlines objects with a series of interconnected vertices, offering precision and flexibility unparalleled in video annotation. Conversely, Bitmask tracking simplifies the annotation process by representing object masks as binary images, streamlining efficiency without compromising clarity. Join us as we explore these techniques that are not just enhancing the process of video annotation, but also paving the way for more accurate and efficient machine learning models. 🚀 Understanding Polygon and Bitmask Tracking Polygon Tracking A polygon is a geometric shape defined by a closed loop of straight-line segments. It can have three or more sides, forming a boundary around an area. In video annotation, polygons are used to outline objects of interest within frames. By connecting a series of vertices, we create a polygon that encapsulates the object’s shape. Advantages of Polygon-Based Tracking Accurate Boundary Representation: Polygons provide a precise representation of an object’s boundary. Unlike bounding boxes (which are rectangular and may not align perfectly with irregular shapes), polygons can closely follow the contours of complex objects. Flexibility: Polygons are versatile. They can adapt to various object shapes, including non-rectangular ones. 
Whether you’re tracking a car, a person, or an animal, polygons allow for flexibility in annotation. Use Cases of Polygon Tracking Object Segmentation: When segmenting objects from the background, polygons excel. For instance, in medical imaging, they help delineate tumors or organs. Motion Analysis: Tracking moving objects often involves polygon-based annotation. Analyzing the trajectory of a soccer ball during a match or monitoring pedestrian movement in surveillance videos are examples. Bitmask Tracking A bitmask is a binary image where each pixel corresponds to a specific object label. Instead of outlining the object’s boundary, bitmasks assign a unique value (usually an integer) to each pixel within the object region. These values act as identifiers, allowing pixel-level annotation. Advantages of Bitmask-Based Tracking Bitmasks enable precise delineation at the pixel level. By assigning values to individual pixels, we achieve accurate object boundaries. This is especially useful when dealing with intricate shapes or fine details. Use Cases of Bitmask Tracking Semantic Segmentation: In semantic segmentation tasks, where the goal is to classify each pixel into predefined classes (e.g., road, sky, trees), bitmasks play a vital role. They provide ground truth labels for training deep learning models. Instance Segmentation: For scenarios where multiple instances of the same object class appear in a frame (e.g., identifying individual cars in a traffic scene), bitmask tracking ensures each instance is uniquely labeled. Temporal Consistency Maintaining temporal consistency when annotating objects in a video is crucial. This means that the annotations for an object should be consistent from one frame to the next. Inconsistent annotations can lead to inaccurate results when the annotated data is used for training machine learning models. Temporal smoothing and interpolation techniques can be used to improve the consistency of the tracking. 
Temporal smoothing involves averaging the annotations over several frames to reduce the impact of any sudden changes. Interpolation, on the other hand, involves estimating the annotations for missing frames based on the annotations of surrounding frames. Both techniques can greatly improve the quality and consistency of the annotations.

Read the documentation to learn how to use interpolation in your annotation.

Applications of Polygon and Bitmask Tracking

Object Detection and Tracking

With polygon tracking, objects of any shape can be accurately annotated, making it particularly useful for tracking objects that have irregular shapes or change shape over time. Bitmask tracking takes this a step further by marking each individual pixel, capturing even the smallest details of the object. This level of precision is crucial for detecting and tracking objects accurately within a video.

Semantic Segmentation

In semantic segmentation, the goal is to assign each pixel in the image to a particular class, making it a highly detailed task. Bitmask tracking, with its ability to mark each individual pixel, is well suited to this task. It allows for the creation of highly accurate masks that can be used to train models for semantic segmentation. Polygon tracking can also be used for semantic segmentation, especially in scenarios where the objects being segmented have clear, defined boundaries.

Interactive Video Editing

Interactive video editing is a process where users can manipulate and modify video content. This involves tasks such as object removal, color grading, and adding special effects. Polygon and bitmask tracking can greatly enhance the process of interactive video editing. With these techniques, objects within the video can be accurately tracked and annotated, making it easier to apply edits consistently across multiple frames. This can lead to more seamless and high-quality edits, improving the overall video editing process.
Semantic Context and Automation

Semantic Context

Scene Understanding: When placing polygons or bitmasks for video annotation, it’s crucial to consider the context of the scene. The semantics of the scene can guide accurate annotations. For instance, understanding the environment, the objects present, and their spatial relationships can help in placing more accurate and meaningful annotations.

Object Relationships: The way objects interact within a scene significantly affects annotation choices. Interactions such as occlusion (where one object partially or fully hides another) and containment (where one object is inside another) need to be considered. Understanding these relationships can lead to more accurate and contextually relevant annotations.

Automated Annotation Tool

AI Assistance: With the advancement of machine learning models, we now have the capability to propose initial annotations automatically. These AI tools can significantly reduce the manual effort required in the annotation process. They can quickly analyze a video frame and suggest potential annotations based on learned patterns and features.

Human Refinement: While AI tools can propose initial annotations, human annotators are still needed to refine these automated results for precision. Annotators can correct any inaccuracies and add nuances that the AI might have missed. This combination of AI assistance and human refinement leads to a more efficient and accurate video annotation process.

Read the blog The Full Guide to Automated Data Annotation for more information.

Real-World Applications

Polygon and Bitmask tracking, along with the concepts of semantic context and automation, have a wide range of real-world applications. Here are a few key areas where they are making a significant impact:

Medical Imaging: In medical imaging, precise annotation can mean the difference between a correct and incorrect diagnosis.
These techniques allow for highly accurate segmentation of medical images, which can aid in identifying and diagnosing a wide range of medical conditions. Autonomous Vehicles: Polygon and Bitmask tracking allow these vehicles to understand their environment in great detail, helping them make better driving decisions. Video Surveillance: In video surveillance, tracking objects accurately over time is key to identifying potential security threats. These techniques can improve the accuracy and efficiency of video surveillance systems, making our environments safer. These are just a few examples of the many possible applications of Polygon and Bitmask tracking. As these techniques continue to evolve, they are set to revolutionize numerous industries and fields. In summary, Polygon and Bitmask tracking are transforming video annotation, paving the way for more precise machine learning models. As we continue to innovate in this space, we’re excited to announce that Encord will be releasing new features soon. Stay tuned for these updates and join us in exploring the future of computer vision with Encord. 🚀
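To make the interpolation idea from the Temporal Consistency discussion concrete, here is a minimal sketch of linear interpolation between two annotated keyframes. It assumes both polygons have the same number of vertices in the same order; the function name `interpolate_polygon` and the sample coordinates are illustrative, and this is not a description of how Encord's tracking works internally.

```python
def interpolate_polygon(poly_a, poly_b, frame_a, frame_b, frame):
    """Linearly interpolate polygon vertices for a frame between two keyframes.

    poly_a and poly_b are lists of (x, y) vertices annotated at frame_a and
    frame_b. Both lists must have the same length and vertex ordering.
    """
    if len(poly_a) != len(poly_b):
        raise ValueError("keyframe polygons must have the same number of vertices")
    if frame_a >= frame_b or not frame_a <= frame <= frame_b:
        raise ValueError("frame must lie between two distinct, ordered keyframes")
    # Fraction of the way from keyframe A to keyframe B.
    t = (frame - frame_a) / (frame_b - frame_a)
    return [
        (xa + t * (xb - xa), ya + t * (yb - ya))
        for (xa, ya), (xb, yb) in zip(poly_a, poly_b)
    ]


# Hypothetical example: a triangle moves 20 pixels to the right over 10 frames.
key_0 = [(0, 0), (10, 0), (10, 10)]
key_10 = [(20, 0), (30, 0), (30, 10)]
midpoint = interpolate_polygon(key_0, key_10, frame_a=0, frame_b=10, frame=5)
print(midpoint)
```

Linear interpolation is the simplest choice and works when motion between keyframes is roughly uniform; objects that deform or accelerate need more keyframes or a model-based tracker.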

Mar 22 2024

5 M

Validating Model Performance Using Encord Active

Model validation is a key machine learning (ML) lifecycle stage, ensuring models generalize well to new, unseen data. This process is critical for evaluating a model's predictions independently from its training dataset, thus testing its ability to perform reliably in the real world. Model validation helps identify overfitting—where a model learns noise rather than the signal in its training data—and underfitting, where a model is too simplistic to capture complex data patterns. Both are detrimental to model performance. Techniques like the holdout method, cross-validation, and bootstrapping are pivotal in validating model performance, offering insights into how models might perform on unseen data. These methods are integral to deploying AI and machine learning models that are both reliable and accurate. This article delves into two parts: Key model validation techniques, the advantages of a data-centric approach, and how to select the most appropriate validation method for your project. How to validate a Mask R-CNN pre-trained model that segments instances in COVID-19 scans using Encord Active, a data-centric platform for evaluating and validating computer vision (CV) models. Ready to dive deeper into model validation and discover how Encord Active can enhance your ML projects? Let’s dive in! The Vital Role of a Data-Centric Approach in Model Validation A data-centric approach to model validation places importance on the quality of data in training and deploying computer vision (CV) and artificial intelligence (AI) models. The approach recognizes that the foundation of any robust AI system lies not in the complexity of its algorithms but in the quality of the data it learns from. High-quality, accurately labeled data (with ground truth) ensures that models can truly understand and interpret the nuances of the tasks they are designed to perform, from predictive analytics to real-time decision-making processes. 
Why Data Quality is Paramount The quality of training data is directly proportional to a model's ability to generalize from training to real-world applications. Poor data quality—including inaccuracies, biases, label errors, and incompleteness—leads to models that are unreliable, biased, or incapable of making accurate predictions. A data-centric approach prioritizes meticulous data preparation, including thorough data annotation, cleaning, and validation. This ensures the data distribution truly reflects the real world it aims to model and reduces label errors. Improving Your Model’s Reliability Through Data Quality The reliability of CV models—and even more recently, foundation models—in critical applications—such as healthcare imaging and autonomous driving—cannot be overstated. A data-centric approach mitigates the risks associated with model failure by ensuring the data has high fidelity. It involves rigorous validation checks and balances, using your expertise and automated data quality tools to continually improve your label quality and datasets. Adopt a data-centric approach to your AI project and unlock its potential by downloading our whitepaper. Key Computer Vision Model Validation Techniques A data-centric approach is needed to validate computer vision models after model training that looks at more than just performance and generalizability. They also need to consider the unique problems of visual data, like how image quality, lighting, and perspectives can vary. Tailoring the common validation techniques specifically for computer vision is about robustly evaluating the model's ability to analyze visual information and embeddings across diverse scenarios: Out-of-Sample Validation: Essential for verifying that a CV model can generalize from its training data to new, unseen images or video streams. This approach tests the model's ability to handle variations in image quality, lighting, and subject positioning that it hasn't encountered during training. 
Cross-Validation and Stratified K-Fold: Particularly valuable in computer vision is ensuring that every aspect of the visual data is represented in both training and validation sets. Stratified K-Fold is beneficial when dealing with imbalanced datasets, common in computer vision tasks, to maintain an equal representation of classes across folds. Leave-One-Out Cross-Validation (LOOCV): While computationally intensive, LOOCV can be particularly insightful for small image datasets where every data point's inclusion is crucial for assessing the model's performance on highly nuanced visual tasks. Bootstrapping: Offers insights into the stability of model predictions across different visual contexts. This method helps understand how training data subset changes can affect the model's performance, which is particularly relevant for models expected to operate in highly variable visual environments. Adversarial Testing: Tests the model's resilience against slight, often invisible, image changes. This technique is critical to ensuring models are not easily perturbed by minor alterations that would not affect human perception. Domain-Specific Benchmarks: Participating in domain-specific challenges offered by ImageNet, COCO, or PASCAL VOC can be a reliable validation technique. These benchmarks provide standardized datasets and metrics, allowing for evaluating a model's performance against a wide range of visual tasks and conditions, ensuring it meets industry standards. Human-in-the-Loop: Involving domain experts in the validation process is invaluable, especially for tasks requiring fine-grained visual distinctions (e.g., medical imaging or facial recognition). This approach helps ensure that the model's interpretations align with human expertise and can handle the subtleties of real-world visual data. 
Ensuring a model can reliably interpret and analyze visual information across various conditions requires a careful balance between automated validation methods and human expertise. Choosing the right validation techniques for CV models involves considering the dataset's diversity, the computational resources available, and the application's specific requirements. Luckily, there are model validation tools that can help you focus on validating the model. At the same time, they do the heavy lifting of providing the insights necessary to validate your CV model’s performance, including providing AI-assisted evaluation features. But before walking through Encord Active, let’s understand the factors you need to consider for choosing the right tool. How to Choose the Right Computer Vision Model Validation Tool When choosing the right model validation tool for computer vision projects, several key factors come into play, each addressing the unique challenges and requirements of working with image data. These considerations ensure that the selected tool accurately evaluates the model's performance and aligns with the project's specific demands. Here's a streamlined guide to making an informed choice: Data Specificity and Complexity: Opt for tools that cater to the variability and complexity inherent in image data. This means capabilities for handling image-specific metrics such as Intersection over Union (IoU) for object detection and Mean Absolute Error (MAE) for tasks like classification and segmentation are crucial. Robust Data Validation: The tool should adeptly manage image data peculiarities, including potential discrepancies between image annotations and the actual images. Look for features that support comprehensive data validation across various stages of the model development cycle, including pre-training checks and ongoing training validations. Comprehensive Evaluation Metrics: Essential for thoroughly assessing a computer vision model's performance. 
The tool should offer a wide array of metrics, including precision-recall curves, ROC curves, and confusion matrices for classification, alongside task-specific metrics like IoU for object detection. It should also support quality metrics for a more holistic, real-world evaluation. Versatile Performance Evaluation: It should support a broad spectrum of evaluation techniques for deep insights into accuracy, the balance between precision and recall, and the model’s ability to distinguish between different classes. Dataset Management: The validation tool should help with efficient dataset handling for proper training-validation splits. For the sake of performance and scale, it should be able to manage large datasets. Flexibility and Customization: The fast-paced nature of computer vision demands tools that allow for customization and flexibility. This includes introducing custom metrics, supporting various data types and model architectures, and adapting to specific preprocessing and integration needs. Considering those factors, you can select a validation tool (open-source toolkits, platforms, etc.) that meets your project's requirements and contributes to developing reliable models. Using Encord Active to Validate the Performance of Your Computer Vision Model Encord Active (EA) is a data-centric model validation solution that enables you to curate valuable data that can truly validate your model’s real-world generalizability through quality metrics. In this section, you will see how you can analyze the performance of a pre-trained Mask R-CNN object detection model with Encord Active on COVID-19 predictions. From the analysis results, you will be able to validate and, if necessary, debug your model's performance. This walkthrough uses Encord Annotate to create a project and import the dataset. We use Encord Active Cloud to analyze the model’s failure modes. We recommend you sign up for an Encord account to follow this guide. 
Import Predictions

Import your predictions onto the platform. Learn how to import Predictions in the documentation. Select the Prediction Set you just uploaded, and Encord Active will use data, label, and model quality metrics to evaluate the performance of your model.

Visualize Model Performance Summary on the Validation Set

Evaluate the model’s performance by inspecting the Model Summary dashboard. It gives an overview of your model’s performance on the validation set with a detailed error categorization (true positive vs. false positive vs. false negative), the F1 score, and mean average precision/recall at a given IoU threshold.

Manually Inspect the Model Results

Beyond visualizing a summary of the model’s performance, it helps to use a tool that lets you manually dig in and inspect how your model works on real-world samples. Encord Active provides an Explorer tab that enables you to filter model predictions by metrics to observe the impact of those metrics on real-world samples. EA’s data-centric build also lets you see how your model correctly or incorrectly makes predictions (detects, classifies, or segments) on the training, validation, and production samples. Let’s see how you can achieve this:

On the Model Summary dashboard → Click the True Positive Count metric to inspect the predictions your model got right.

Click on one of the images using the expansion icon to see how well the model detects the class, the confidence score with which it predicts the object, other scores on performance metrics, and metadata.

Still under the Explorer tab → Click on Overview (the tab on the right) → Click on False Positive Count to inspect instances that the model failed to detect correctly.

It seems most classes flagged as False Positives are due to poor object classification quality (the annotations are not 100% accurate). Let’s look closely at an instance. In that instance, the model correctly predicts that the object is ‘Cardiomediastinum’.
Still, the second overlapping annotation has a broken track for some reason, so Encord Active classifies its prediction as a false positive using a combination of Broken Object Track and other relevant quality metrics.

Under Filter → Add filter, you will see parameters and attributes to filter your model’s performance. For example, if you added your validation set to Active through Annotate, you can validate your model’s performance on that set and, likewise, on the production set.

Visualize the Impact of Metrics on Model Performance

Evaluate the model outcome count to understand the distribution of the correct and incorrect results for each class. Under the Model Evaluation tab → Click on Outcome to see the distribution chart.

Now, you should see the count for the number of predictions the model gets wrong. Using this chart, you can get a high-level perspective on the issues with your model. In this case, the model fails to correctly segment the ‘Airways’ object in the instances.

The Intersection over Union (IoU) threshold is 0.5. This is the minimum overlap between a prediction and its ground-truth annotation for the prediction to count as a match; it is separate from the model’s confidence score. Use the IOU Threshold slider under the Overview tab to see the outcome count based on a higher or lower threshold. You can also select specific classes you want to inspect under the Classes option.

Dig Deeper into the Metrics

Once you understand the model outcome count, you can dig deeper into specific metrics like precision, recall, and F1 scores if they are relevant to your targets. Notice the low precision, recall, and F1 scores per class! Also, group the scores by the model outcome count to understand how the model performs in each class.

You could also use the precision-recall curve to analyze and highlight the classes that are harder for the model to detect with high confidence. You can also break down the model’s precision and recall values for the predictions of each object over the relevant metrics you want to investigate.
For example, if you want to see the precision and recall by the Object Classification Quality metric, under Metric Performance → Select the Metric dropdown menu, and then the metric you want to investigate the model’s precision by.

Validate the Model’s Performance on Business Criteria

Now it’s time to see the metrics impacting the model’s performance the most and determine, based on your information, if it’s good or bad (needs debugging) for business. For instance, if the Confidence scores are the least performing metrics, you might be worried that your vision model is naive in its predictions given the previous consensus on the outcome count (false positives and negatives).

Here is the case for this model under the Metric Performance dashboard (remember, you can use the IoU Threshold slider to check the metric impact at different thresholds): the Relative Area (the object's size) significantly influences our model’s performance.

Considering the business environment in which you want to deploy the model, would this be a good or bad event? This is up to you to decide based on your technical and business requirements. If the model does not work, you can run more experiments and train more models until you find the optimal one.

Awesome! You have seen how Encord Active plays a key role in providing features for validating your model’s performance with built-in metrics. In addition, it natively integrates with Encord Annotate, an annotation tool, to facilitate data quality improvement that can enhance the performance of your models.

Conclusion

Selecting the right model validation tools ensures that models perform accurately and efficiently. It involves the assessment of a model's performance through quantitative metrics such as the IoU, mAP (mean Average Precision), and MAE, or qualitatively, by subject matter experts. The choice of evaluation metric should align with the business objectives the model aims to achieve.
Furthermore, model selection hinges on comparing various models using these metrics within a carefully chosen evaluation schema, emphasizing the importance of a proper validation strategy to ensure robust model performance before deployment. Validating model performance is particularly vital in sectors where such inaccuracies could compromise safety. Check out our customer stories to learn from large and small teams that have improved their data quality and model performance with the help of Encord. Platforms like Encord, which specialize in improving data and model quality, are instrumental in this context. Encord Active, among others, provides features designed to refine data quality and bolster model accuracy, mitigating the risks associated with erroneous predictions or data analysis.
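Since IoU comes up throughout this walkthrough, a small worked example may help. The sketch below computes IoU for two binary masks stored as nested lists and checks the result against a 0.5 threshold. The function name `mask_iou` and the toy 3×3 masks are illustrative assumptions, and this is only the textbook definition, not Encord Active's implementation.

```python
def mask_iou(pred, truth):
    """Intersection over Union of two equally sized binary masks (lists of rows)."""
    intersection = 0
    union = 0
    for row_pred, row_truth in zip(pred, truth):
        for p, t in zip(row_pred, row_truth):
            intersection += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Two empty masks have no overlap to measure; treat that case as 0.0.
    return intersection / union if union else 0.0


# Hypothetical 3x3 masks: the prediction is shifted one column to the left.
predicted = [
    [1, 1, 0],
    [1, 1, 0],
    [0, 0, 0],
]
ground_truth = [
    [0, 1, 1],
    [0, 1, 1],
    [0, 0, 0],
]

iou = mask_iou(predicted, ground_truth)
IOU_THRESHOLD = 0.5
is_match = iou >= IOU_THRESHOLD
print(iou, is_match)
```

The masks share 2 pixels out of 6 covered in total, so the IoU is 1/3 and the prediction would not count as a match at a 0.5 threshold, even though the model found the object in roughly the right place.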

Mar 02 2024

8 M

Comparative Analysis of YOLOv9 and YOLOv8 Using Custom Dataset on Encord Active

Even as foundation models gain popularity, advancements in object detection models remain significant. YOLO has consistently been the preferred choice in machine learning for object detection. Let’s train the latest iterations of the YOLO series, YOLOv9 and YOLOv8, on a custom dataset and compare their model performance.

In this blog, we will train YOLOv9 and YOLOv8 on the xView3 dataset. The xView3 dataset contains aerial imagery with annotations for maritime object detection, making it an ideal choice for evaluating the robustness and generalization capabilities of object detection models.

If you wish to curate and annotate your own dataset for a direct comparison between the two models, you have the option to create the dataset using Encord Annotate. Once annotated, you can seamlessly follow the provided code to train and evaluate both YOLOv9 and YOLOv8 on your custom dataset. Read the Encord Annotate Documentation to get started with your annotation project.

Prerequisites

We are going to run our experiment on Google Colab. If you are running it on your local system, please bear in mind that the instructions and code were written to run in a Colab notebook.

Make sure you have access to a GPU. You can either run the command below or navigate to Edit → Notebook settings → Hardware accelerator, set it to GPU, and then click Save.

```python
!nvidia-smi
```

To make it easier to manage datasets, images, and models we create a HOME constant.

```python
import os

HOME = os.getcwd()
print(HOME)
```

Train YOLOv9 on Encord Dataset

Install YOLOv9

```python
!git clone https://github.com/SkalskiP/yolov9.git
%cd yolov9
!pip install -r requirements.txt -q
!pip install -q roboflow encord av

# This is a convenience class that holds the info about Encord projects and makes everything easier.
# The class supports bounding boxes and polygons across both images, image groups, and videos.
!wget 'https://gist.githubusercontent.com/frederik-encord/e3e469d4062a24589fcab4b816b0d6ec/raw/fa0bfb0f1c47db3497d281bd90dd2b8b471230d9/encord_to_roboflow_v1.py' -O encord_to_roboflow_v1.py
```

Imports

```python
from typing import Literal
from pathlib import Path
from IPython.display import Image
import roboflow
from encord import EncordUserClient
from encord_to_roboflow_v1 import ProjectConverter
```

Data Preparation

Set up access to the Encord platform by creating and using an SSH key.

```python
# Create ssh-key-path
key_path = Path("../colab_key.pub")
if not key_path.is_file():
    !ssh-keygen -t ed25519 -f ../colab_key -N "" -q
key_content = key_path.read_text()
```

We will now retrieve the data from Encord, convert it to the format required by YOLO, and store it on disk. Note that for larger projects, this process may run into disk space limits.

The converter will automatically split your dataset into training, validation, and testing sets based on the specified sizes.

```python
# Directory for images
data_path = Path("../data")
data_path.mkdir(exist_ok=True)

client = EncordUserClient.create_with_ssh_private_key(
    Path("../colab_key").resolve().read_text()
)
project_hash = "9ca5fc34-d26f-450f-b657-89ccb4fe2027"  # xView3 tiny
encord_project = client.get_project(project_hash)
converter = ProjectConverter(
    encord_project,
    data_path,
)
dataset_yaml_file = converter.do_it(batch_size=500, splits={"train": 0.5, "val": 0.1, "test": 0.4})
encord_project_title = converter.title
```

Download Model Weight

We will download the YOLOv9-e and the gelan-c weights. Although the YOLOv9 paper mentions versions yolov9-s and yolov9-m, it's worth noting that weights for these models are currently unavailable in the YOLOv9 repository.
```python
!mkdir -p {HOME}/weights
!wget -q https://github.com/WongKinYiu/yolov9/releases/download/v0.1/yolov9-e-converted.pt -O {HOME}/weights/yolov9-e.pt
!wget -P {HOME}/weights -q https://github.com/WongKinYiu/yolov9/releases/download/v0.1/gelan-c.pt
```

You can predict and evaluate the results of object detection with the YOLOv9 weights pre-trained on the COCO dataset.

Check out the blog YOLOv9 Explained and How to Run it if you want to run object detection on pre-trained YOLOv9 weights.

Train Custom YOLOv9 Model for Object Detection

We train a custom YOLOv9 model from a pre-trained gelan-c model.

```python
!python train.py \
--batch 8 --epochs 20 --img 640 --device 0 --min-items 0 --close-mosaic 15 \
--data $dataset_yaml_file \
--weights {HOME}/weights/gelan-c.pt \
--cfg models/detect/gelan-c.yaml \
--hyp hyp.scratch-high.yaml
```

You can examine and validate your training results. The code for validation and inference with the custom model is available on Colab Notebook. Here we will focus on comparing the model performances.

Converting Custom YOLOv9 Model Predictions to Encord Active Format

```python
pth = converter.create_encord_json_predictions(get_latest_exp("detect") / "labels", Path.cwd().parent)
print(f"Predictions exported to {pth}")
```

Download the predictions to your local computer and upload them via the UI to Encord Active to analyze your results.

Moving on to training YOLOv8!

Train YOLOv8 on Encord Dataset

Install YOLOv8

```python
!pip install ultralytics==8.0.196

from IPython import display
display.clear_output()

import ultralytics
ultralytics.checks()
```

Dataset Preparation

As we are doing a comparative analysis of two models, we will use the same dataset to train YOLOv8.

Train Custom YOLOv8 Model for Object Detection

    from ultralytics import YOLO

    model = YOLO('yolov8n.pt')  # load a pretrained YOLOv8n detection model
    model.train(data=dataset_yaml_file.as_posix(), epochs=20)  # train the model
    model.predict()

The code for running inference on the test dataset is available in the Colab Notebook shared below.

Converting Custom YOLOv8 Model Predictions to Encord Active Format

    pth = converter.create_encord_json_predictions(get_latest_exp("detect", ext="predict") / "labels", Path.cwd().parent)
    print(f"Predictions exported to {pth}")

Download this JSON file and upload it to Encord Active via the UI.

Comparative Analysis on Encord Active

In Encord Active, under the Model Evaluation tab, you can compare the predictions of both models.

The Model Summary tab shows the Mean Average Precision (mAP), Mean Average Recall (mAR), and F1 score for both models. You can also compare the differences in predictions between YOLOv8 and YOLOv9.

Precision

YOLOv8 may excel in correctly identifying objects (high true positive count) but at the risk of also detecting objects that aren't present (high false positive count). On the other hand, YOLOv9 may be more conservative in its detections (lower false positive count) but could potentially miss some instances of objects (higher false negative count).

Recall

In terms of recall, YOLOv8 performs better, with a higher true positive count (101) than YOLOv9 (43), indicating that it correctly identifies more of the objects present in the dataset. Both models, however, show an equal count of false positives (643), suggesting similar levels of incorrect identifications of non-existent objects. YOLOv8 also has a lower false negative count (1261) than YOLOv9 (1315), meaning it misses fewer actual objects, which highlights its advantage in recall.
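
To make these counts concrete, the snippet below turns them into precision, recall, and F1 using the standard definitions. It is a minimal sketch: `detection_metrics` is a hypothetical helper, and the values Encord Active reports in its Model Summary may be computed differently (for example, averaged across classes or confidence thresholds).

```python
def detection_metrics(tp, fp, fn):
    """Precision, recall, and F1 from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Counts as reported in the Recall discussion above.
yolov8 = detection_metrics(tp=101, fp=643, fn=1261)
yolov9 = detection_metrics(tp=43, fp=643, fn=1315)

for name, metrics in (("YOLOv8", yolov8), ("YOLOv9", yolov9)):
    print(name, {key: round(value, 3) for key, value in metrics.items()})
```

With these counts, YOLOv8 comes out ahead of YOLOv9 on all three values, which matches the recall comparison described above.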

Precision-Recall Curve

Based on the observed precision-recall curves, YOLOv8 achieves a higher area under the curve (AUC-PR) than YOLOv9. This indicates that YOLOv8 generally performs better in terms of both precision and recall across different threshold values, capturing a higher proportion of true positives while minimizing false positives more effectively than YOLOv9.

The precision-recall curve is not the only way to evaluate model performance. Other metrics include the F1 score, the IoU distribution, and more. For more information on different quality metrics, read the blog Data, Label, & Model Quality Metrics in Encord.

Metric Correlation

The metric impact on performance in Encord refers to how specific metrics influence the performance of your model. Encord lets you find out which metrics have the most influence on your model's performance. This view tells you whether a positive change in a metric leads to a positive change (positive correlation) or a negative change (negative correlation) in model performance.

The dimensions of the labeled objects significantly influence the performance of both models, which underscores the importance of object size in the dataset. It's possible that the YOLOv9 model is adversely affected by the smaller objects in the dataset, leading to its comparatively poorer performance.

Metric Performance

The Metric Performance view in Encord's model evaluation shows in detail how a specific metric affects the performance of your model, so you can understand the relationship between that metric and model performance.

In conclusion, the comparison between YOLOv8 and YOLOv9 on Encord Active highlights distinct performance characteristics in terms of precision and recall.
While YOLOv8 excels in correctly identifying objects with a higher true positive count, it also exhibits a higher false positive count, indicating a potential for over-detection. On the other hand, YOLOv9 demonstrates a lower false positive count but may miss some instances of objects due to its higher false negative count.

If you want to improve your object detection model, read the blog How to Analyze Failure Modes of Object Detection Models for Debugging for more information.

The precision-recall curve analysis suggests that YOLOv8 generally outperforms YOLOv9, capturing a higher proportion of true positives while minimizing false positives more effectively. However, it's important to consider other metrics such as the F1 score and the IoU distribution for a comprehensive evaluation of model performance. Moreover, understanding the impact of labeled object dimensions and specific metric correlations can provide valuable insights into improving model performance on Encord Active.
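
For readers who want to reproduce the AUC-PR comparison outside the UI, the area under a precision-recall curve can be approximated with the trapezoidal rule. The snippet below is a minimal sketch under stated assumptions: `auc_pr` is a hypothetical helper, the two curves are made-up points for illustration rather than the curves from this experiment, and Encord Active's own computation may differ (detection benchmarks often use an interpolated average precision instead).

```python
def auc_pr(points):
    """Approximate the area under a precision-recall curve.

    `points` is an iterable of (recall, precision) pairs. The pairs are
    sorted by recall and integrated with the trapezoidal rule.
    """
    pts = sorted(points)
    area = 0.0
    for (r0, p0), (r1, p1) in zip(pts, pts[1:]):
        area += (r1 - r0) * (p0 + p1) / 2.0
    return area


# Hypothetical curves, NOT measured values from the models in this post.
curve_a = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]
curve_b = [(0.0, 1.0), (0.5, 0.25), (1.0, 0.0)]

print("curve A:", auc_pr(curve_a))
print("curve B:", auc_pr(curve_b))
```

A curve that keeps precision higher as recall increases yields a larger area, which is the sense in which one model is said to outperform another on AUC-PR.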

Mar 01 2024

8 M

How to Analyze Failure Modes of Object Detection Models for Debugging