Contents

What is the Segment Anything Model (SAM)?

What is Model Fine-Tuning?

Why Would I Fine-Tune a Model?

How to Fine-Tune Segment Anything Model [With Code]

Fine-Tuning for Downstream Applications

Conclusion

Encord Blog

How To Fine-Tune Segment Anything


Written by

Alexandre Bonnet

Computer vision is having its ChatGPT moment with the release of the Segment Anything Model (SAM) by Meta last week. Trained on over 1 billion segmentation masks, SAM is a foundation model for predictive AI use cases rather than generative AI. While it has shown an incredible amount of flexibility in its ability to segment a wide range of image modalities and problem spaces, it was released without “fine-tuning” functionality.

This tutorial will outline some of the key steps to fine-tune SAM's mask decoder, in particular describing which functions from SAM to use to pre- and post-process the data so that it's in good shape for fine-tuning.

What is the Segment Anything Model (SAM)?

The Segment Anything Model (SAM) is a segmentation model developed by Meta AI. It is considered the first foundation model for computer vision. SAM was trained on a huge corpus of data containing millions of images and over a billion masks, making it extremely powerful. As its name suggests, SAM is able to produce accurate segmentation masks for a wide variety of images. SAM’s design allows it to take human prompts into account, making it particularly powerful for human-in-the-loop annotation. These prompts can be multi-modal: they can be points on the area to be segmented, a bounding box around the object to be segmented, or a text prompt about what should be segmented (text prompts are explored in the SAM paper but are not supported by the publicly released model).

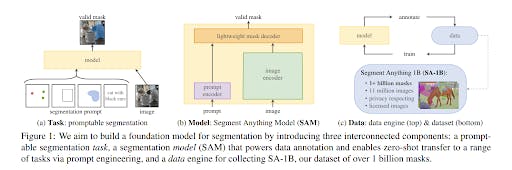

The model is structured into 3 components: an image encoder, a prompt encoder, and a mask decoder.

The image encoder generates an embedding for the image being segmented, whilst the prompt encoder generates an embedding for the prompts. The image encoder is a particularly large component of the model. This is in contrast to the lightweight mask decoder, which predicts segmentation masks based on the embeddings. Meta AI has made the weights and biases of the model trained on the Segment Anything 1 Billion Mask (SA-1B) dataset available as a model checkpoint.
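To see how the parameters are split between the three components, you can count them per submodule. The sketch below assumes a model that exposes `image_encoder`, `prompt_encoder` and `mask_decoder` attributes, as the `Sam` class in Meta's `segment_anything` package does. `parameter_counts` is a hypothetical helper, and `ToySam` is a tiny stand-in so the example runs without downloading a checkpoint; its layers are not SAM's real layers.

```python
from torch import nn


def parameter_counts(model: nn.Module) -> dict[str, int]:
    """Return the number of parameters in each of SAM's three components."""
    components = ("image_encoder", "prompt_encoder", "mask_decoder")
    return {
        name: sum(p.numel() for p in getattr(model, name).parameters())
        for name in components
    }


# Toy stand-in with the same attribute names as segment_anything's Sam class.
# With the real package you would build the model instead, e.g.:
#   from segment_anything import sam_model_registry
#   model = sam_model_registry["vit_b"](checkpoint="sam_vit_b_01ec64.pth")
class ToySam(nn.Module):
    def __init__(self) -> None:
        super().__init__()
        self.image_encoder = nn.Conv2d(3, 16, kernel_size=3)  # 16*3*3*3 + 16 = 448
        self.prompt_encoder = nn.Linear(4, 8)                 # 4*8 + 8 = 40
        self.mask_decoder = nn.Linear(8, 1)                   # 8 + 1 = 9


print(parameter_counts(ToySam()))  # {'image_encoder': 448, 'prompt_encoder': 40, 'mask_decoder': 9}
```

Running the same helper on a real SAM checkpoint is a quick way to confirm that the image encoder dominates the parameter count.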

Learn more about how Segment Anything works in our explainer blog post Segment Anything Model (SAM) Explained.

What is Model Fine-Tuning?

Publicly available state-of-the-art models have a custom architecture and are typically supplied with pre-trained model weights. If these architectures were supplied without weights then the models would need to be trained from scratch by the users, who would need to use massive datasets to obtain state-of-the-art performance.

Model fine-tuning is the process of taking a pre-trained model (architecture+weights) and showing it data for a particular use case. This will typically be data that the model hasn’t seen before, or that is underrepresented in its original training dataset.

The difference between fine-tuning the model and starting from scratch is the starting value of the weights and biases. If we were training from scratch, these would be randomly initialized according to some strategy. In such a starting configuration, the model would ‘know nothing’ of the task at hand and perform poorly. By using pre-existing weights and biases as a starting point we can ‘fine tune’ the weights and biases so that our model works better on our custom dataset. For example, the information learned to recognize cats (edge detection, counting paws) will be useful for recognizing dogs.
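In PyTorch terms, the difference comes down to where the parameters start. A minimal sketch, using a single `nn.Linear` layer as a stand-in for a pre-trained network (the layer and its sizes are arbitrary assumptions):

```python
import torch
from torch import nn

torch.manual_seed(0)

# Stand-in for a network whose weights were learned on a large dataset.
pretrained = nn.Linear(4, 2)

# Training from scratch: a fresh layer starts from PyTorch's random initialization.
scratch = nn.Linear(4, 2)

# Fine-tuning: a fresh layer whose starting values are copied from the pre-trained one.
finetuned = nn.Linear(4, 2)
finetuned.load_state_dict(pretrained.state_dict())

print(torch.equal(finetuned.weight, pretrained.weight))  # True
print(torch.equal(scratch.weight, pretrained.weight))    # False
```

Training then updates `finetuned` on the custom dataset, starting from what the pre-trained model already encodes rather than from random values.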

Why Would I Fine-Tune a Model?

The purpose of fine-tuning a model is to obtain higher performance on data that the pre-trained model has not seen before. For example, an image segmentation model trained on a broad corpus of data gathered from phone cameras will have mostly seen images from a horizontal perspective.

If we tried to use this model for satellite imagery taken from a vertical perspective, it may not perform as well. If we were trying to segment rooftops, the model may not yield the best results. The pre-training is useful because the model will have learned how to segment objects in general, so we want to take advantage of this starting point to build a model that can accurately segment rooftops. Furthermore, it is likely that our custom dataset would not have millions of examples, so we want to fine-tune instead of training the model from scratch.

Fine-tuning is desirable because it lets us obtain better performance on our specific use case without incurring the computational cost of training a model from scratch.

How to Fine-Tune Segment Anything Model [With Code]

Background & Architecture

We gave an overview of the SAM architecture in the introduction section. The image encoder has a complex architecture with many parameters. In order to fine-tune the model, it makes sense for us to focus on the mask decoder which is lightweight and therefore easier, faster, and more memory efficient to fine-tune.
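Restricting training to the decoder amounts to switching off gradients for the other two components. A minimal sketch, assuming the model exposes `image_encoder`, `prompt_encoder` and `mask_decoder` attributes like `segment_anything`'s `Sam`; `freeze_all_but_decoder` is a hypothetical helper and `ToySam` is a small stand-in model:

```python
from torch import nn


def freeze_all_but_decoder(model: nn.Module) -> int:
    """Disable gradients outside model.mask_decoder; return the trainable parameter count."""
    for p in model.parameters():
        p.requires_grad_(False)
    for p in model.mask_decoder.parameters():
        p.requires_grad_(True)
    return sum(p.numel() for p in model.parameters() if p.requires_grad)


class ToySam(nn.Module):
    def __init__(self) -> None:
        super().__init__()
        self.image_encoder = nn.Conv2d(3, 16, kernel_size=3)
        self.prompt_encoder = nn.Linear(4, 8)
        self.mask_decoder = nn.Linear(8, 1)


model = ToySam()
print(freeze_all_but_decoder(model))  # 9: only the decoder's weights and bias
```

Explicit freezing is not strictly required if the optimizer only receives `mask_decoder.parameters()` and the encoders run under `torch.no_grad()`, but it makes the intent visible and stops autograd from tracking the frozen parts if they are ever called outside that context.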

In order to fine-tune SAM, we need to extract the underlying pieces of its architecture (image and prompt encoders, mask decoder). We cannot use SamPredictor.predict for two reasons:

- We want to fine-tune only the mask decoder

- This function calls SamPredictor.predict_torch, which is decorated with @torch.no_grad(), preventing us from computing gradients

Thus, we need to examine the SamPredictor.predict function and call the appropriate functions with gradient calculation enabled on the part we want to fine-tune (the mask decoder). Doing this is also a good way to learn more about how SAM works.
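The effect of the decorator is easy to reproduce in isolation: any tensor computed inside a `@torch.no_grad()` function is cut off from the autograd graph, so `backward()` cannot reach the weights that produced it. A small sketch, with an `nn.Linear` layer standing in for the mask decoder (the layer and input sizes are arbitrary):

```python
import torch
from torch import nn

decoder = nn.Linear(8, 1)  # stand-in for the mask decoder
x = torch.randn(2, 8)


@torch.no_grad()
def predict_no_grad(inputs: torch.Tensor) -> torch.Tensor:
    # Decorated the same way as SamPredictor.predict_torch.
    return decoder(inputs)


def predict_with_grad(inputs: torch.Tensor) -> torch.Tensor:
    return decoder(inputs)


print(predict_no_grad(x).requires_grad)    # False: nothing to backpropagate through
print(predict_with_grad(x).requires_grad)  # True: gradients can flow to decoder.weight
```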

Creating a Custom Dataset

We need three things to fine-tune our model:

- Images on which to draw segmentations

- Segmentation ground truth masks

- Prompts to feed into the model

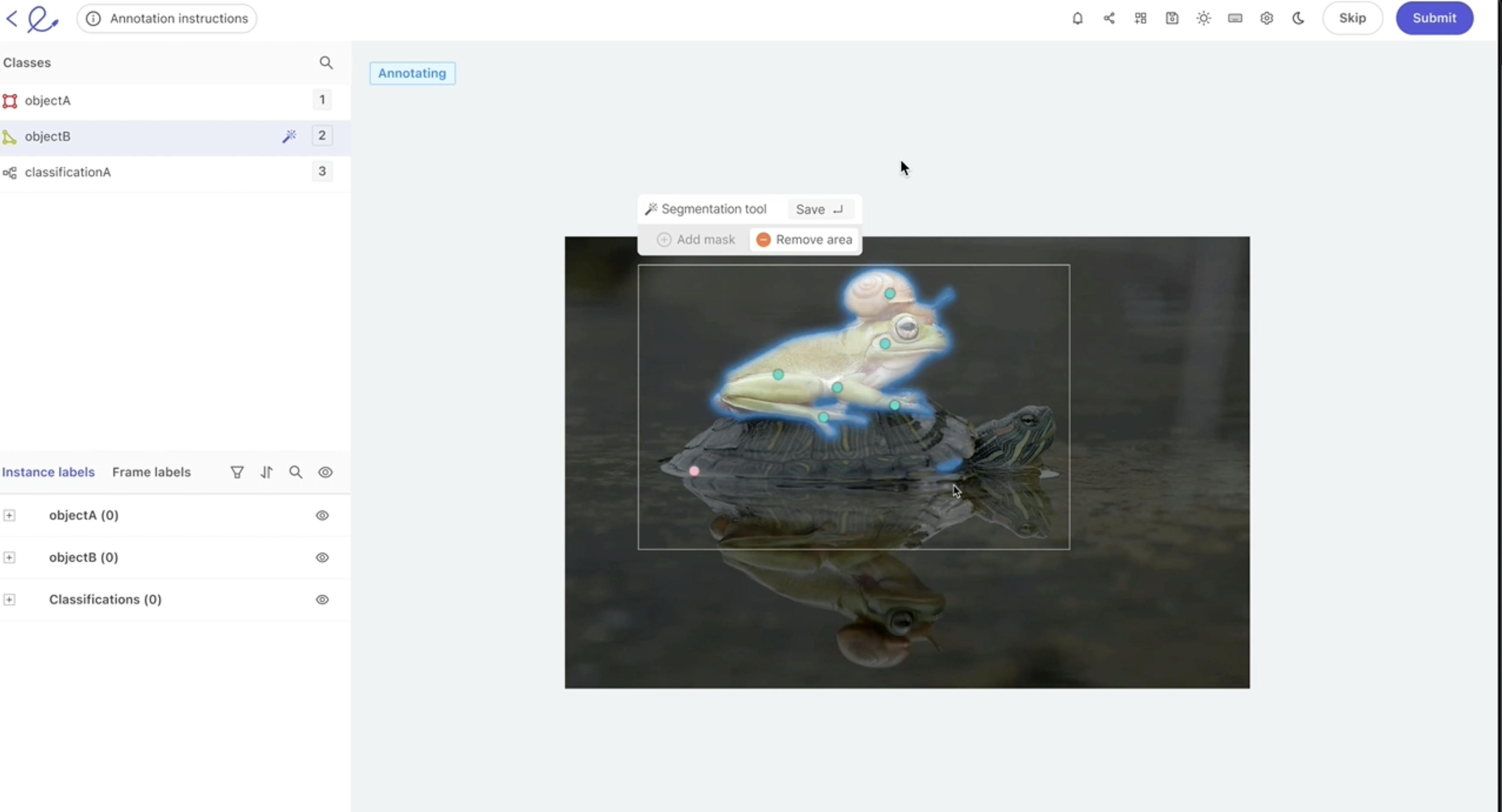

We chose the stamp verification dataset since it contains data that SAM is unlikely to have seen during training (i.e., stamps on documents). Running inference with the pre-trained weights confirms that SAM performs well, but not perfectly, on this dataset. The ground truth masks are also extremely precise, which will allow us to calculate accurate losses. Finally, this dataset contains bounding boxes around the segmentation masks, which we can use as prompts to SAM. An example image is shown above. These bounding boxes match the workflow a human annotator would follow when generating segmentations.
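SAM's box prompts are pixel coordinates in XYXY order (`x_min, y_min, x_max, y_max`). This dataset ships with boxes, but if yours only has masks, a tight box can be derived from each ground-truth mask. A minimal sketch: `mask_to_box` is a hypothetical helper, it assumes one object per binary mask, and it returns inclusive pixel indices.

```python
import torch


def mask_to_box(mask: torch.Tensor) -> torch.Tensor:
    """Return the tight XYXY box [x_min, y_min, x_max, y_max] around a 2-D binary mask."""
    ys, xs = torch.nonzero(mask, as_tuple=True)  # row indices, column indices
    if ys.numel() == 0:
        raise ValueError("mask is empty")
    return torch.stack([xs.min(), ys.min(), xs.max(), ys.max()]).float()


mask = torch.zeros(6, 8, dtype=torch.bool)
mask[2:5, 1:4] = True  # rows 2-4, columns 1-3
print(mask_to_box(mask))  # tensor([1., 2., 3., 4.])
```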

Input Data Preprocessing

We need to preprocess the scans, converting them from numpy arrays to PyTorch tensors. To do this, we can follow what happens inside SamPredictor.set_image and SamPredictor.set_torch_image, which preprocess the image. First, we can use utils.transforms.ResizeLongestSide to resize the image, as this is the transform used inside the predictor. We can then convert the image to a PyTorch tensor and use SAM's preprocess method (Sam.preprocess) to finish preprocessing.
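To make these two steps less of a black box, the sketch below re-implements them for illustration only: the longest-side resize arithmetic of `ResizeLongestSide` and the normalize-and-pad logic of `Sam.preprocess`, using the defaults of `segment_anything` as we understand them (a 1024-pixel encoder input and the pixel mean and standard deviation below). `preprocess_shape` and `normalize_and_pad` are hypothetical names; in real code you would call the library's own transform and `sam_model.preprocess`.

```python
import torch
import torch.nn.functional as F

IMG_SIZE = 1024  # assumed encoder input size (sam_model.image_encoder.img_size)
PIXEL_MEAN = torch.tensor([123.675, 116.28, 103.53]).view(-1, 1, 1)
PIXEL_STD = torch.tensor([58.395, 57.12, 57.375]).view(-1, 1, 1)


def preprocess_shape(old_h: int, old_w: int, long_side: int = IMG_SIZE) -> tuple[int, int]:
    """Target (h, w) so that the longest side equals long_side, keeping the aspect ratio."""
    scale = long_side / max(old_h, old_w)
    return int(old_h * scale + 0.5), int(old_w * scale + 0.5)


def normalize_and_pad(image_chw: torch.Tensor) -> torch.Tensor:
    """Normalize a resized (3, H, W) image and zero-pad it to (1, 3, IMG_SIZE, IMG_SIZE)."""
    x = (image_chw.float() - PIXEL_MEAN) / PIXEL_STD
    h, w = x.shape[-2:]
    # F.pad takes (left, right, top, bottom) for the last two dimensions.
    x = F.pad(x, (0, IMG_SIZE - w, 0, IMG_SIZE - h))
    return x.unsqueeze(0)


new_h, new_w = preprocess_shape(1500, 2000)
print(new_h, new_w)  # 768 1024
resized = torch.randint(0, 256, (3, new_h, new_w), dtype=torch.uint8)
print(normalize_and_pad(resized).shape)  # torch.Size([1, 3, 1024, 1024])
```

With the real package, the equivalent steps are `transform = ResizeLongestSide(sam_model.image_encoder.img_size)`, `transform.apply_image(image)` on the HWC uint8 array, `torch.as_tensor(...).permute(2, 0, 1).contiguous()[None, :, :, :]`, and `sam_model.preprocess(...)`. It is worth keeping `original_image_size = image.shape[:2]` and `input_size = tuple(transformed_image.shape[-2:])` at this point, because `postprocess_masks` needs both later.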

Training Setup

We download the model checkpoint for the vit_b model and load it:

```python
from segment_anything import sam_model_registry

sam_model = sam_model_registry['vit_b'](checkpoint='sam_vit_b_01ec64.pth')
```

We can set up an Adam optimizer with its default hyperparameters and specify that the parameters to tune are those of the mask decoder:

```python
import torch

optimizer = torch.optim.Adam(sam_model.mask_decoder.parameters())
```

At the same time, we can set up our loss function, for example Mean Squared Error:

```python
loss_fn = torch.nn.MSELoss()
```

Training Loop

In the main training loop, we will be iterating through our data items, generating masks, and comparing them to our ground truth masks so that we can optimize the model parameters based on the loss function.

In this example, we used a GPU for training since it is much faster than using a CPU. It is important to use .to(device) on the appropriate tensors to make sure that we don’t have certain tensors on the CPU and others on the GPU.

We compute the image embeddings inside the torch.no_grad() context manager: we are not fine-tuning the image encoder, and tracking gradients through this large component would otherwise cause memory issues.

```python
with torch.no_grad():
    image_embedding = sam_model.image_encoder(input_image)
```
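Because the image encoder is frozen, its output for a given image never changes during training. One design choice, which the steps here do not require, is to compute each embedding once and reuse it across epochs. A minimal sketch with a hypothetical `cached_embedding` helper and a toy convolution standing in for `sam_model.image_encoder`:

```python
import torch
from torch import nn


def cached_embedding(cache: dict, key: str, encoder: nn.Module, image: torch.Tensor) -> torch.Tensor:
    """Run the frozen encoder once per image key and reuse the stored result afterwards."""
    if key not in cache:
        with torch.no_grad():
            cache[key] = encoder(image)
    return cache[key]


encoder = nn.Conv2d(3, 4, kernel_size=1)  # stand-in for sam_model.image_encoder
cache: dict = {}
image = torch.randn(1, 3, 8, 8)
first = cached_embedding(cache, "stamp_001", encoder, image)
second = cached_embedding(cache, "stamp_001", encoder, image)
print(first is second, first.requires_grad)  # True False
```

Each SAM image embedding is a 256 × 64 × 64 float tensor (about 4 MB in float32), so for larger datasets you may prefer to keep the cache on the CPU or on disk.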

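We can also generate the prompt embeddings within the no_grad context manager. As prompts we use our bounding box coordinates, first rescaled to the resized image (as SamPredictor.predict does with ResizeLongestSide.apply_boxes) and then converted to PyTorch tensors.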
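The box has to be expressed in the coordinates of the resized image, not the original one. The sketch below re-implements the scaling that `ResizeLongestSide.apply_boxes` performs, for illustration only (`resize_box` and the example sizes are hypothetical), and adds a leading batch dimension so the result can be passed as `boxes`.

```python
import torch


def resize_box(box_xyxy: list[float], original_hw: tuple[int, int], long_side: int = 1024) -> torch.Tensor:
    """Scale an XYXY box from original image coordinates to the resized image, shape (1, 4)."""
    old_h, old_w = original_hw
    scale = long_side / max(old_h, old_w)
    new_h, new_w = int(old_h * scale + 0.5), int(old_w * scale + 0.5)
    box = torch.tensor(box_xyxy, dtype=torch.float)
    # x coordinates scale with the width ratio, y coordinates with the height ratio.
    box[0::2] *= new_w / old_w
    box[1::2] *= new_h / old_h
    return box[None, :]  # add a batch dimension


box_torch = resize_box([100, 200, 300, 400], original_hw=(1500, 2000))
print(box_torch)  # approximately [[51.2, 102.4, 153.6, 204.8]]
```

In practice, move the result to the same device as the model with `.to(device)` before calling the prompt encoder.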

```python
with torch.no_grad():
    sparse_embeddings, dense_embeddings = sam_model.prompt_encoder(
        points=None,
        boxes=box_torch,
        masks=None,
    )
```

Finally, we can generate the masks. Note that here we are in single-mask generation mode (in contrast to the 3 masks that are normally output).

```python
low_res_masks, iou_predictions = sam_model.mask_decoder(
    image_embeddings=image_embedding,
    image_pe=sam_model.prompt_encoder.get_dense_pe(),
    sparse_prompt_embeddings=sparse_embeddings,
    dense_prompt_embeddings=dense_embeddings,
    multimask_output=False,
)
```
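With `multimask_output=True`, the decoder instead returns several candidate masks per prompt, each with a predicted IoU score. A common way to use them is to keep the candidate with the highest predicted IoU; the sketch below shows this on random tensors, with shapes `(B, K, H, W)` and `(B, K)` assumed for illustration (`select_best_mask` is a hypothetical helper).

```python
import torch


def select_best_mask(masks: torch.Tensor, iou_predictions: torch.Tensor) -> torch.Tensor:
    """Pick, for each prompt, the mask with the highest predicted IoU.

    masks: (B, K, H, W) candidate mask logits; iou_predictions: (B, K).
    Returns a (B, 1, H, W) tensor.
    """
    best = iou_predictions.argmax(dim=1)          # (B,) index of the best candidate
    batch_idx = torch.arange(masks.shape[0])
    return masks[batch_idx, best].unsqueeze(1)


masks = torch.randn(2, 3, 256, 256)
scores = torch.tensor([[0.2, 0.9, 0.5], [0.7, 0.1, 0.3]])
best = select_best_mask(masks, scores)
print(best.shape)  # torch.Size([2, 1, 256, 256])
```

Single-mask mode avoids this selection step and yields exactly one mask to compare against each ground truth, which keeps the loss computation simple.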

The final step here is to upscale the masks back to the original image size since they are low resolution. We can use Sam.postprocess_masks to achieve this. We will also want to generate binary masks from the predicted masks so that we can compare these to our ground truths. It is important to use torch functionals in order to not break backpropagation.

```python
from torch.nn.functional import threshold, normalize

upscaled_masks = sam_model.postprocess_masks(low_res_masks, input_size, original_image_size).to(device)
binary_mask = normalize(threshold(upscaled_masks, 0.0, 0)).to(device)
```
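One property of this binarization is worth checking before a long training run. `threshold` followed by `normalize` over the single-channel dimension maps every logit to either 0 or 1, and that mapping has zero gradient almost everywhere, so the loss can end up sending (numerically) no signal back to the decoder. The sketch below checks this on random logits and compares it with a differentiable alternative that feeds sigmoid probabilities to the same MSE loss. That alternative is our substitution, not the tutorial's original code; the tensor sizes are arbitrary.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(1, 1, 4, 4, requires_grad=True)  # stand-in for upscaled_masks
gt = (torch.rand(1, 1, 4, 4) > 0.5).float()           # stand-in ground-truth mask
loss_fn = torch.nn.MSELoss()

# Thresholded + normalized mask, as in the snippet above.
binary_mask = F.normalize(F.threshold(logits, 0.0, 0))
loss_fn(binary_mask, gt).backward()
grad_binary = logits.grad.abs().max().item()

# Differentiable alternative: compare probabilities instead of a hard mask.
logits.grad = None
loss_fn(torch.sigmoid(logits), gt).backward()
grad_sigmoid = logits.grad.abs().max().item()

print(f"max |grad| via binary mask: {grad_binary:.2e}")   # close to zero
print(f"max |grad| via sigmoid:     {grad_sigmoid:.2e}")  # clearly non-zero
```

Binary cross-entropy on the raw logits (`torch.nn.BCEWithLogitsLoss`) is another common differentiable choice; the thresholded mask remains useful for evaluation metrics such as IoU.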

Finally, we can calculate the loss and run an optimization step:

```python
loss = loss_fn(binary_mask, gt_binary_mask)
optimizer.zero_grad()
loss.backward()
optimizer.step()
```

By repeating this over a number of epochs and batches we can fine-tune the SAM decoder.
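Putting the steps together, one epoch over pre-processed samples might look like the sketch below. It assumes each sample is a dict holding the tensors built earlier (`input_image`, `box_torch`, `input_size`, `original_image_size`, and a float `gt_mask` shaped like the upscaled mask); that layout and the `train_one_epoch` name are hypothetical. Unlike the binarized loss above, it feeds sigmoid probabilities to the loss so that gradients can reach the decoder; treat that as our substitution.

```python
import torch


def train_one_epoch(sam_model, samples, optimizer, loss_fn, device):
    """Fine-tune only the mask decoder for one pass over `samples`; return the mean loss."""
    sam_model.mask_decoder.train()
    total = 0.0
    for sample in samples:
        with torch.no_grad():  # frozen encoders: no gradients, less memory
            image_embedding = sam_model.image_encoder(sample["input_image"].to(device))
            sparse_embeddings, dense_embeddings = sam_model.prompt_encoder(
                points=None,
                boxes=sample["box_torch"].to(device),
                masks=None,
            )
        low_res_masks, _ = sam_model.mask_decoder(
            image_embeddings=image_embedding,
            image_pe=sam_model.prompt_encoder.get_dense_pe(),
            sparse_prompt_embeddings=sparse_embeddings,
            dense_prompt_embeddings=dense_embeddings,
            multimask_output=False,
        )
        upscaled_masks = sam_model.postprocess_masks(
            low_res_masks, sample["input_size"], sample["original_image_size"]
        )
        loss = loss_fn(torch.sigmoid(upscaled_masks), sample["gt_mask"].to(device))
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        total += loss.item()
    return total / max(len(samples), 1)
```

A typical driver would call `train_one_epoch(sam_model, samples, optimizer, loss_fn, device)` once per epoch and log the returned mean loss, ideally alongside a validation metric on held-out images.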

Saving Checkpoints and Starting a Model from it

Once we are done with training and satisfied with the performance uplift, we can save the state dict of the tuned model using:

```python
torch.save(sam_model.state_dict(), PATH)
```

We can then load this state dict when we want to perform inference on data that is similar to the data we used to fine-tune the model.
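A minimal save-and-reload round trip, sketched with a toy module standing in for `sam_model` (the helper names and file name are placeholders). With `segment_anything`, the fresh model would come from `sam_model_registry['vit_b']()` without a checkpoint argument, after which `load_state_dict` restores the fine-tuned weights.

```python
import os
import tempfile

import torch
from torch import nn


def save_checkpoint(model: nn.Module, path: str) -> None:
    torch.save(model.state_dict(), path)


def load_checkpoint(model: nn.Module, path: str, device: str = "cpu") -> nn.Module:
    # map_location lets a checkpoint saved on a GPU be reloaded on a CPU-only machine.
    model.load_state_dict(torch.load(path, map_location=device))
    return model.eval()  # switch to inference mode


tuned = nn.Linear(8, 1)  # stand-in for the fine-tuned sam_model
path = os.path.join(tempfile.mkdtemp(), "sam_vit_b_finetuned.pth")
save_checkpoint(tuned, path)
restored = load_checkpoint(nn.Linear(8, 1), path)
print(torch.equal(restored.weight, tuned.weight))  # True
```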

You can find the Colab Notebook with all the code you need to fine-tune SAM here. Keep reading if you want a fully working solution out of the box!

Fine-Tuning for Downstream Applications

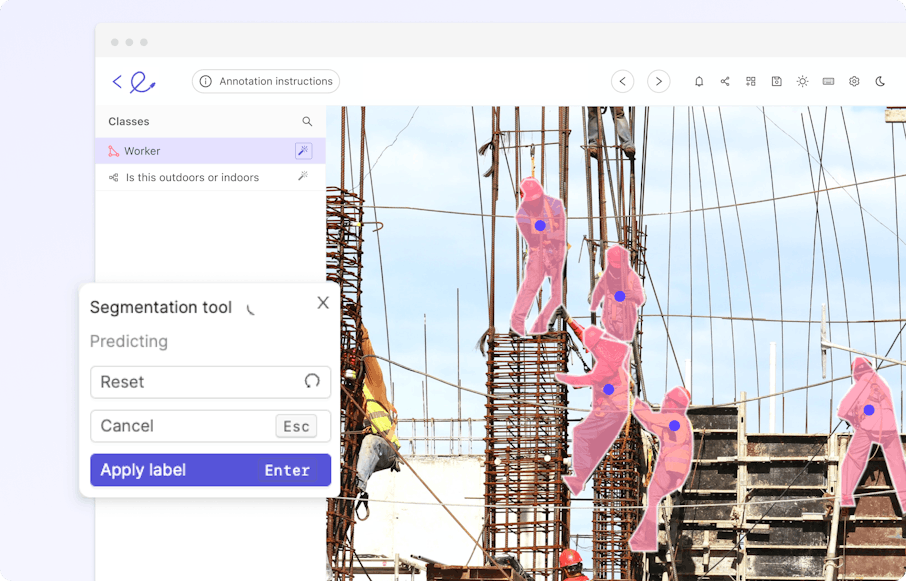

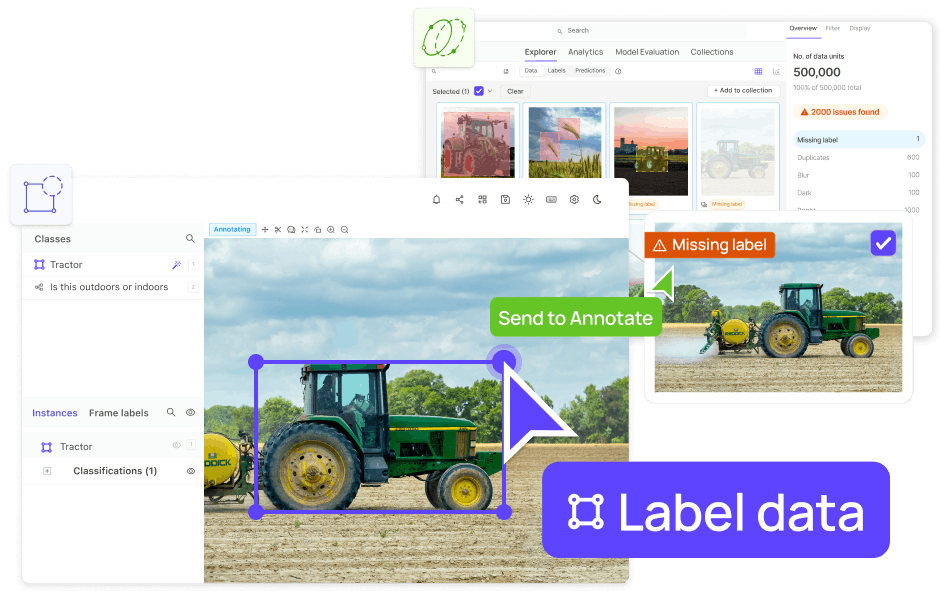

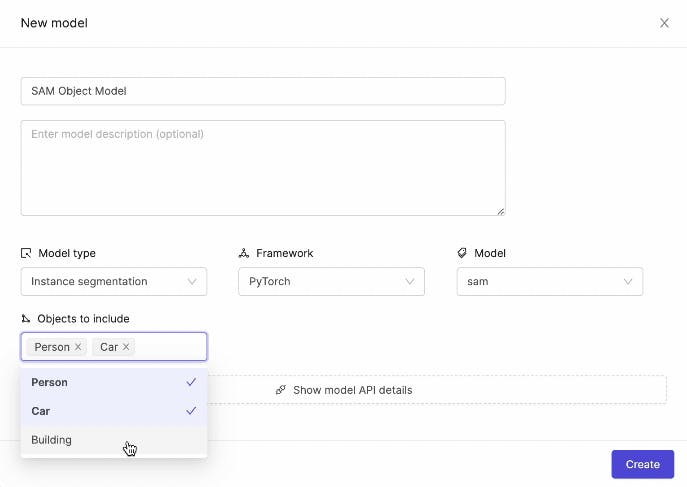

While SAM does not currently offer fine-tuning out of the box, we are building a custom fine-tuner integrated with the Encord platform. As shown in this post, we fine-tune the decoder in order to achieve this. This is available as an out-of-the-box one-click procedure in the web app, where the hyperparameters are automatically set.

Original vanilla SAM mask:

Mask generated by fine-tuned version of the model:

We can see that this mask is tighter than the original mask. This was the result of fine-tuning on a small subset of images from the stamp verification dataset, and then running the tuned model on a previously unseen example. With further training and more examples, we could obtain even better results.


Conclusion

That's all, folks!

You have now learned how to fine-tune the Segment Anything Model (SAM). If you're looking to fine-tune SAM out of the box, you might also be interested to learn that we have recently released the Segment Anything Model in Encord, allowing you to fine-tune the model without writing any code.


- Yes, SAM can be fine-tuned to adapt its performance to specific tasks or datasets, enhancing its capabilities and accuracy.

- Fine-tuning in generative AI involves adjusting pre-trained models on new data, refining their parameters to improve performance for specific tasks.

- Fine-tuning can be challenging due to the need for carefully chosen hyperparameters and potential overfitting to the training data.

- Implementing a Segment Anything model involves preparing data (the SA-1B dataset used to train SAM is also available), selecting one of the model checkpoints available on GitHub, fine-tuning on specific tasks, and evaluating performance metrics.

- SAM (Segment Anything Model) is a versatile deep learning model designed for image segmentation tasks. Trained on the SA-1B dataset, featuring over 1 billion masks on 11M images, SAM excels in zero-shot transfers to new tasks and distributions.

- Segmentation provides detailed object boundaries, offering precise localization compared to detection, which identifies objects but lacks boundary information, making segmentation more suitable for tasks requiring accuracy in object delineation.

- Utilize the SAM model by fine-tuning it on relevant data for specific segmentation tasks, ensuring proper evaluation and adjustment to achieve desired performance levels.

Related blogs

How To Use Encord’s Bitmask Brush Tool

In machine learning, precise image annotation is crucial for training accurate and reliable models. Encord's Bitmask brush tool revolutionizes the annotation process by allowing interactive and fine-grained selection of regions of interest within images. Designed to cater to the needs of machine learning practitioners, this comprehensive guide will walk you through the ins and outs of utilizing Encord's Bitmask brush tool, empowering you to create precise and highly accurate annotations within the Encord platform. What is the bit mask brush? A bit mask brush allows you to interactively define regions or areas of interest within an image by "brushing" over them. As you paint or brush over the image, the bit mask brush assigns specific ‘bits’ or values to the corresponding pixels or regions you select. These bits represent the labels or categories associated with the selected areas. Accessing brush tool: Click on 🖌️ or press ‘f’ For example, if you are labeling outlines of blood vessels in an image, you can use a bit of mask brush to brush over the pixels corresponding to the vessel’s boundaries. The bit mask brush would assign a specific value or bit pattern to those pixels, indicating that they belong to the vessel class or category. Similarly, if you are labeling topologically separate regions belonging to the same frame classification, you can use a bitmask brush to assign different bit patterns or values to the regions you select. This allows you to differentiate between regions or segments within the same frame category. Using the Bitmask Brush The Bitmask brush is a powerful tool for creating annotations or labels by selecting specific regions within an image, providing flexibility and control over the labeling process. Let’s explore its key functionalities: Selection and Size Adjustment When the Bitmask annotation type is selected, the brush tool is automatically chosen by default. 
You can access it by clicking the brush icon or pressing the 'f' key, and you are able to adjust the brush size using a convenient slider. This enables you to tailor the brush size to the level of detail needed for your annotations. Annotation Creation Once you have adjusted the brush size, you can begin annotating your image by selecting the desired areas. As you brush over the regions, the Bitmask brush assigns specific bit patterns or values to the corresponding pixels, indicating their association with the selected labels or categories. Apply Label Once your annotation is complete, you can apply the label by clicking the "Apply label" button or pressing the Enter key, finalizing the annotation and incorporating it into the labeling or annotation process. 💡To use the bitmap masks, the ontology should contain the Bitmask annotation type. Eraser The Eraser tool provides the ability to erase parts or the entirety of your bitmask selection. This can be useful if you need to refine or modify your annotations before applying the final label. You can access the Eraser tool by clicking the eraser icon or pressing the 'h' key on your keyboard while the popup window is open. Accessing eraser tool: Click on eraser icon or press ‘h’ Threshold Brush The Threshold brush, specific to DICOM images, offers additional functionality by enabling you to set an intensity value threshold for your labels. The preview toggle allows you to visualize which parts of the image correspond to your set threshold, helping you determine the areas that will be labeled when covered by the Threshold brush. To access the Threshold brush, click the corresponding icon or press the 'g' key while the popup window is open. Adjust the brush size and the range of intensity values using the sliders in the popup. 
Accessing threshold tool: Click on the corresponding icon or press ‘g’ With the Encord Bitmask SDK The Encord Bitmask SDK empowers you to effortlessly generate, modify, and analyze annotations within the Encord platform, leveraging the vast capabilities of Python's comprehensive libraries and tools to their fullest extent. Find more details in the bitmask documentation. To conclude, Encord’s Bitmask brush tool, equipped with its diverse range of features, offers an intuitive and flexible solution for creating annotations within the Encord platform. Harnessing the power of the Bitmask brush and the Encord Bitmask SDK, you can elevate your annotation workflow to achieve precise and reliable results. Recommended Articles Medical Image Segmentation: A Complete Guide 6 Best Open Source Annotation Tools for Medical Imaging Guide to Experiments for Medical Imaging in Machine Learning 7 Ways to Improve Medical Imaging Dataset Future for Computer Vision in Healthcare

Jun 23 2023

5 M

Search Anything Model: Combining Vision and Natural Language in Search

In the current AI boom, one thing is certain: data is king. Data is at the heart of the production and development of new models; and yet, the processing and structuring required to get data to a form that is consumable by modern AI are often overlooked. One of the most primordial elements of intelligence that can be leveraged to facilitate this is search. Search is crucial to understanding data: the more ways to search and group data, the more insights you can extract. The greater the insights, the more structured the data becomes. Historically, search capabilities have been limited to uni-modal approaches: models used for images or videos in vision use cases have been distinct from those used for textual data in natural language processing. With GPT-4’s ability to process both images and text, we are only now starting to see the potential impacts of performant multi-modal models that span various forms of data. Embracing the future of multi-modal data, we propose the Search Anything Model. The unified framework combines natural language, visual property, similarity, and metadata search together in a single package. Leveraging computer vision processing, multi-modal embeddings, LLMs, and traditional search characteristics, Search Anything allows for multiple forms of structured data querying using natural language. If you want to find all bright images with multiple cats that look similar to a particular reference image, Search Anything will match over multiple index types to retrieve data of the requisite form and conditions. What is Natural Language Search? Natural Language Search (NLS) uses human-like language to query and retrieve information from databases, datasets, or documents. Unlike traditional keyword-based searches, NLS algorithms employ Natural Language Processing (NLP) techniques to understand the context, semantics, and intent behind user queries. 
By interpreting the query’s meaning, NLS systems provide more accurate and relevant search results, mimicking how humans communicate. The computer vision domain requires a similar general understanding of data content without requiring metadata for visuals. 💡Encord is a data-centric computer vision company. With Encord Active, you can use the Search Anything Model to explore, curate, and debug your datasets. What Can You Use the Search Anything Model for? Let’s dive into some examples of computer vision uses for the Search Anything Model. Data Exploration Search Anything simplifies data exploration by allowing users to ask questions in plain language and receive valuable insights. Instead of manually formulating complex queries and algorithms that may require pre-existing metadata, you can pose questions such as: “Which images are blurry?” Or “How is my model performing on images with multiple labels?” Search Anything interprets these queries to provide visualizations or summaries of the data quickly and effectively to gain valuable insights. Data Curation Search Anything streamlines data curation, making the process highly efficient and user-friendly. Filter, sort, or aggregate data using only natural language commands For example, you can request the following: “Remove all the very bright images from my dataset” Or “Add an ‘unannotated’ tag to all the data that has not been annotated yet.” Search Anything processes these commands, automatically performs the requested actions, and presents the curated data all without complex coding or SQL queries. Using Encord Active to filter out bright images in the COCO dataset. Use the bulk tagging feature to tag all the data. Data Debugging Search Anything expedites the process of identifying and resolving data issues. 
To investigate anomalies to inconsistencies, ask questions or issue commands such as: “Are there any missing values for the image difficulty quality metric?” Or “Find records that are labeled ‘cat’ but don’t look like a typical cat.” Once again, Search Anything analyzes the data, detects discrepancies, and provides actionable insights to assist you in identifying and rectifying data problems efficiently. 💡Read to find out how to find and fix label errors with Encord Active. Cataloging Data for E-commerce Search Anything can also enhance the cataloging process for e-commerce platforms. By understanding product photos and descriptions, Search Anything enable users to search and categorize products efficiently, users can ask: . “Locate the green and sparkly shoes.” Search Anything interprets this query, matches the desired criteria with the product images and descriptions, and displays the relevant products, facilitating improved product discovery and customer experience. How to Use Search Anything Model with Encord? At Encord, we are building an end-to-end visual data engine for computer vision. Our latest release, Encord Active, empowers users to interact with visual data only using natural language. Let’s dive into a few use cases: Use Case 1: Data Exploration User Query: “red dress,” “denim jeans,” and “black shirts” Encord Active identifies the images in the dataset that most accurately corresponds to the query. Use Case 2: Data Curation User query: “Display the very bright images” Encord Active displays filtered results from the dataset based on the specified criterion. Read to find out how to choose the right data for your computer vision project. Use Case 3: Data Debugging User Query: “Find all the non-singular images?” Encord Active detects any duplicated images in the dataset, and displays images that are not unique within the dataset. Can I Use My Own Model? Yes, Encord Active allows you to leverage your models. 
Through fine-tuning or integrating custom embedding models, you can tailor the search capabilities to your specific needs, ensuring optimal performance and relevance. 💡At Encord, we are actively researching how to fine-tune LLMs for the purpose of searching Encord Active projects efficiently. Get in touch if you would like to get involved. Conclusion Natural Language Search is revolutionizing the way we interact with data, enabling intuitive and efficient exploration, curation, and debugging. By harnessing the power of NLP and computer vision models, our Search Anything Model allows you to pose queries, issue commands, and obtain actionable insights using human-like language. Whether you are an ML engineer, a data scientist, or an e-commerce professional, incorporating NLS into your workflow can significantly enhance productivity and unlock the full potential of your data.

Jun 20 2023

5 M

How to Automate Data Labeling [Examples + Tutorial]

If you feed an AI model with junk, it’s bound to return the favor. The quality of the data being consumed by an AI algorithm has a direct correlation with its success when it comes to generalizing to new instances; this is the reason data professionals spend 80% of their time during model development, ensuring the data is appropriately prepared, and is representative of the real world. Data labeling is an essential task in supervised learning, as it enables AI algorithms to create accurate input-to-output mappings and build a comprehensive understanding of their environment. Data labeling can consume up to 80% of data preparation time, and at least 25% of an entire ML project is spent labeling. Therefore, efficient data labeling strategies are critical for improving the speed and quality of machine learning model development. 💡Read the blog to learn how to automate your data labeling process. Manual data labeling can be a challenging and error-prone process, as it relies on human judgment and subjective interpretation. Labelers may have different levels of expertise, leading to consistency in the labeling process and reduced accuracy. Moreover, manual data labeling can be time-consuming and expensive, especially for large datasets. This can hinder the scalability and efficiency of AI model development. Integrating automated data labeling into your machine learning projects can be an effective strategy for mitigating the challenges of manual data labeling. By leveraging AI technology to perform data labeling tasks, businesses can reduce the risk of human error, increase the speed and efficiency of model development, and minimize costs associated with manual labeling. Additionally, automated data labeling can help improve the accuracy and consistency of labeled data, resulting in more reliable and robust AI models. 
Let's take a closer look at automated data labeling, including its workings, advantages, and how Encord can assist you in automating your data labeling process. Using Annotation Tools for Automated Data Labeling Automated data labeling is using software tools and algorithms to automatically annotate or tag data with labels or tags that help identify and classify the data. This process is used in machine learning and data science to create training datasets for machine learning models. “Automated data annotation is a way to harness the power of AI-assisted tools and software to accelerate and improve the quality of creating and applying labels to images and videos for computer vision models.” – Frederik H. The Full Guide to Automated Data Annotation. Annotation tools can be used for automated data labeling by providing a user interface for creating and managing annotations or labels for a dataset. These tools can help to automate the process of labeling data by providing features such as: Auto-labeling: Annotation tools can use pre-built machine learning models or algorithms to generate labels for data automatically. Active learning: Annotation tools can use machine learning algorithms to suggest labels for data based on patterns and correlations in the existing labeled data. Human-in-the-loop: Annotation tools can provide a user interface for human annotators to review and correct the labels generated by the automation process. Quality control: Annotation tools can help to ensure the quality of the labels generated by the automation process by providing tools for validation and verification. Data management: Annotation tools can provide tools for managing and organizing large datasets, including tools for filtering, searching, and exporting data. Organizations can reduce the time and cost required to create high-quality training datasets for machine learning models by using annotation tools for automated data labeling. 
However, it is important to ensure that the tools used are appropriate for the specific task and that the labeled data is carefully validated and verified to ensure its quality. AI Annotation Tools 💡Check out our curated list of the 9 Best Image Annotation Tools for Computer Vision to discover what other options are on the market. Encord Annotate Encord Annotate is an automated annotation platform that performs AI-assisted image annotation, video annotation, and dataset management; part of the Encord product, alongside Encord Active. The key features of Encord Annotate include: Support for all annotation types such as bounding boxes, polygons, polylines, image segmentation, and more. It incorporates auto-annotation tools such as Meta’s Segment Anything Model and other AI-assisted labeling techniques. It has integrated MLOps workflow for computer vision and machine learning teams Use-case-centric annotations — from native DICOM & NIfTI annotations for medical imaging to SAR-specific features for geospatial data. Easy collaboration, annotator management, and QA workflows — to track annotator performance and increase label quality. Robust security functionality — label audit trails, encryption, FDA, CE Compliance, and HIPAA compliance. Benefits of Automated Data Labeling with AI Annotation Tools The most straightforward way to label data is to implement it manually, where a human user is presented with raw unlabeled data and applies a set of rules to label it. However, this approach has certain drawbacks such as being time-consuming and costly and having a higher probability of natural human error. An alternative approach is to use AI annotation tools to automate the labeling process, which can help address the issues associated with manual labeling by: Increasing accuracy and efficiency: Speed is just as important as being accurate. 
Yes, an automatic AI annotation tool can process large amounts of images much faster than a human can, but what makes it so effective is its ability to remain accurate, which ensures labels are precise and reliable. Improving productivity and workflow: It’s normal for humans to make mistakes – especially when they are performing the same task for 8 or more hours straight. When you use an AI-assisted labeling tool, the workload is significantly reduced, which means annotating teams can put more focus on ensuring things are labeled correctly the first time around. Reduction in labeling costs and resources: Deciding to manually annotate data means paying someone or a group of people to carry out the task; this means each hour that goes by has a cost, which can quickly become extremely high. An AI-assisted labeling tool may take off some of that load by allowing a human annotation team can manually label a percentage of the data and then have an AI tool do the rest. How to Automate Data Labeling with Encord A step-by-step guide to automating data labeling with Encord: Micro models Micro-models are models that are designed to be overtrained for a specific task or piece of data, making them effective in automating one aspect of data annotation workflow. They are not meant to be good at solving general problems and are typically used for a specific purpose. 💡Read the blog to find out more about micro-models. The main difference between a traditional model and a micro-model is not in their architecture or parameters but in their application domain, the data science practices used to create them, and their ultimate end-use. Step 1: Step 2: Auto-segmentation Auto-segmentation is a technique that involves using algorithms or annotation tools to automatically segment an image or video into different regions or objects of interest. This technique is used in various industries, including medical imaging, object detection, and scene segmentation. 
For example, in medical imaging, auto-segmentation can identify and segment anatomical structures such as tumors, organs, and blood vessels, which can help medical professionals make more accurate diagnoses and treatment plans.

Auto-segmentation can speed up image analysis and reduce the likelihood of human error. However, the accuracy of auto-segmentation algorithms depends on the quality of the input data and the complexity of the segmentation task, so manual review and correction may still be necessary in some cases.

💡Read the explainer blog on the Segment Anything Model to understand how foundation models are used for auto-segmentation.

Interpolation

Interpolation is the process of estimating the value of a function at points that lie between known data points. It is typically used to fill in missing values or smooth noise in a dataset. Several interpolation methods are used in ML, such as linear, polynomial, and spline interpolation; the right choice depends on the characteristics of the data and the goals of the project.

Object Tracking

Object tracking plays a vital role in applications such as security and surveillance, autonomous vehicles, and video analysis. It is a core component of computer vision that enables machines to follow objects in motion: with object tracking, you can predict the position and other relevant attributes of moving objects across a video or image sequence.

💡Check out the Complete Guide to Object Tracking Tutorial for more insight.

Conclusion

Supervised machine learning algorithms depend on labeled data to learn how to generalize to unseen instances.
The quality of the data provided to the model has a significant impact on its final performance, so it is vital that the data is accurately labeled and representative of real-world conditions. This is why AI teams often spend a large portion of their time preparing and labeling data before it reaches the model training phase.

Manually labeling data is slow, tedious, expensive, and prone to human error. One way to mitigate this is with automated data labeling and annotation solutions, which offer a cost-effective way to speed up the process without sacrificing accuracy and, in turn, improve the team’s productivity and workflow.

Ready to accelerate the automation of your data annotation and labeling?

Sign up for an Encord Free Trial: The Active Learning Platform for Computer Vision, used by the world’s leading computer vision teams. AI-assisted labeling, model training and diagnostics, and finding and fixing dataset errors and biases, all in one collaborative active learning platform, to get to production AI faster. Try Encord for Free Today.

Want to stay updated? Follow us on Twitter and LinkedIn for more content on computer vision, training data, and active learning. Join our Discord channel to chat and connect.

Automated Data Labeling FAQs

What are the benefits of automated data labeling?

Automated data labeling increases the accuracy and efficiency of the labeling process compared with labeling done by humans. It also reduces labeling costs and resources, since you no longer need to pay labelers to perform every task.

How is automated data labeling different from manual labeling?

Manual data labeling is the process of using individual annotators to assign labels to raw data. Automated labeling does the same job, but the responsibility passes from humans to machines to speed up the process and reduce costs.

What is AI data labeling?
AI data labeling refers to a technique that uses machine learning to assign one or more meaningful labels to raw data (e.g., images or videos). These labels give a machine learning model the context it needs to learn input-output mappings from the data and make inferences on new, unseen data.

May 19 2023


Object Classification with Caltech 101

Object classification is a computer vision technique that identifies and categorizes objects within an image or video. In this article, we give you all the information you need to apply object classification to the Caltech 101 dataset.

What is Object Classification?

Object classification uses machine learning algorithms, such as deep neural networks, to analyze the visual features of an image and predict the class or type of the objects it contains.

Object classification is often used in applications such as self-driving cars, where the vehicle must recognize and classify different types of objects on the road, such as pedestrians, traffic signs, and other vehicles.

Object classification for self-driving cars (Source)

It’s also used in image recognition tasks, such as identifying specific objects within an image or detecting anomalies or defects in manufacturing processes.

Object classification algorithms typically involve two steps: feature extraction and classification. In the feature extraction step, the algorithm identifies visual features, such as edges, shapes, and patterns, that are characteristic of the objects in the image. In the classification step, these features are used to assign the objects to predefined classes, such as "car", "dog", or "person". The model is trained on a large dataset of labeled images, and its weights are adjusted iteratively to minimize the error between the predicted and actual labels. Once trained, a computer vision (CV) or machine learning (ML) model can classify objects in new images by analyzing their visual features and predicting the class of each object.
Object classification is challenging because object appearance varies with factors such as lighting, occlusion, and pose. However, advances in machine learning and computer vision have significantly improved classification accuracy in recent years, making it an increasingly important technology in many fields.

Importance of Object Classification in Computer Vision

Object classification is a fundamental component of many computer vision applications, such as autonomous vehicles, facial recognition, surveillance systems, and medical imaging. Here are some reasons why it matters:

It enables models to interpret and understand the visual world. By identifying objects within an image or video, ML models can extract meaningful information, such as object location, size, and orientation, and use it to make informed decisions.

It is critical for tasks such as object tracking, object detection, and object recognition. These tasks are essential in applications such as autonomous vehicles, where machines must detect and track pedestrians, other vehicles, and obstacles in real time.

It is a key component of image and video search. By classifying objects within images and videos, machines can categorize and index visual data, making relevant content easier to search and retrieve.

It is important for medical imaging, where it can be used to detect and diagnose diseases and abnormalities. For example, object classification can help identify cancerous cells within a medical image, enabling early diagnosis and treatment.

Overall, object classification is a core computer vision task that lets machines understand and interpret the visual world, making it a crucial technology in a wide range of applications.
Caltech 101 Dataset

Caltech 101 is a popular dataset for object recognition in computer vision. It contains images from 101 object categories, such as “helicopter”, “elephant”, and “chair”, plus a background category containing images that do not belong to any of the 101 object categories. Each object category has about 40 to 800 images, and most categories have about 50 images.

Images in the Caltech101 dataset

Object recognition algorithms can be divided into two groups: those that recognize individual objects and those that recognize categories. Training a model to recognize individual objects is easier, but building a lightweight model that is invariant to lighting and viewpoint still requires a diverse dataset. Categories are more general, require more complex representations, and are harder to learn: the appearance of objects within a category can vary greatly, so the model must be flexible enough to handle this variation.

Many machine learning practitioners and researchers use the Caltech 101 dataset to benchmark state-of-the-art object recognition models. The images across all 101 object categories are captured under varying lighting conditions, backgrounds, and viewpoints, making the dataset a good candidate for training a robust computer vision model.

Apart from object recognition, Caltech 101 is also used for tasks such as fine-grained image classification, density estimation, semantic correspondence, unsupervised anomaly detection, and semi-supervised image classification.

More examples of images from Caltech101

For example, Caltech 101 was used in the paper AutoAugment: Learning Augmentation Policies from Data, which proposes a procedure called AutoAugment that automatically searches for improved data augmentation policies. The authors test how well these policies transfer by applying policies learned on ImageNet to Caltech 101.
About the Dataset

Research Paper: Learning generative visual models from few training examples: An incremental Bayesian approach tested on 101 object categories
Authors: Fei-Fei Li, Marco Andreetto, Marc'Aurelio Ranzato, and Pietro Perona
Number of Images: 9,146
Categories: 101
Resolution: ~300 × 200 pixels
Dataset Size: 1.2 GB
License: Creative Commons Attribution 4.0 International
Release: September 2003
Webpage: Caltech webpage, TensorFlow webpage, Torchvision webpage

Advantages of Using Caltech 101

Caltech 101 has several advantages over similar object recognition datasets:

Uniform size and presentation: Most images within each category are uniform in size and in the relative position of the objects of interest.
Low level of clutter/occlusion: Object recognition algorithms usually learn features unique to the object. With little clutter and occlusion, these features are more distinctive and transferable.
High-quality annotations: The dataset comes with high-quality annotations collected from real-world scenarios. High-quality annotations are crucial for classification tasks, as they provide the ground truth labels needed to train and evaluate machine learning algorithms and ensure accurate, reliable results.

Disadvantages of Caltech 101

There are a few trade-offs with the Caltech 101 dataset:

Uniform dataset: The images are very uniform and rarely occluded, so object recognition models trained solely on this dataset may not perform well in real-world applications.
Limited object classes: Although the dataset contains 101 object categories, they are far from representative of all possible categories, which limits the dataset’s applicability to real-world scenarios.
For example, it does not include medical images, industrial objects such as machinery, tools, or equipment, or cultural artifacts such as artworks, historical objects, or cultural heritage sites.
Aliasing and artifacts due to manipulation: Some images have been rotated and scaled from their original orientation and suffer from some aliasing.

Analyzing a dataset for a computer vision model, however, requires more detailed information, which helps determine whether the dataset is fit for your project. With Encord Active, you can explore the data, the labels, and their distributions, and get information about data and label quality. Understanding the data and label distributions, quality, and other properties helps computer vision practitioners decide whether a dataset suits their project and avoid potential biases or errors in their models.

How to Download the Caltech 101 Dataset?

Since it’s a popular dataset, several dataset loaders are available.

PyTorch

If you want to use PyTorch to download the dataset, follow the documentation for torchvision.datasets.Caltech101:

import torchvision
dataset = torchvision.datasets.Caltech101(root="data", download=True)

TensorFlow

If you are building your computer vision model with TensorFlow, follow the instructions in the TensorFlow Datasets catalog. To load the whole dataset as NumPy arrays in a single batch, run:

import tensorflow_datasets as tfds
(img_train, label_train), (img_test, label_test) = tfds.as_numpy(tfds.load('caltech101', split=['train', 'test'], batch_size=-1, as_supervised=True))

Its source code is tfds.datasets.caltech101.Builder, which you can also find on the catalog page.

Encord Active

In this blog, we will download the dataset using Encord Active, an open-source active learning toolkit for visualizing data, evaluating computer vision models, finding model failure modes, and much more.
Run the following commands in your favorite Python environment:

python3.9 -m venv ea-venv
source ea-venv/bin/activate
# within venv
pip install encord-active

Alternatively, install Encord Active from GitHub:

pip install git+https://github.com/encord-team/encord-active

To check that Encord Active has been installed, run:

encord-active --help

Encord Active has many sandbox datasets, such as MNIST, BDD100K, and TACO, and Caltech101 is one of them. These sandbox datasets are commonly used in computer vision for building benchmark models. Now that you have Encord Active installed, download the Caltech101 dataset by running:

encord-active download

The script asks you to choose a project: navigate the options with the ↓ and ↑ keys, select the Caltech-101 train or test dataset, and hit Enter. For convenience, the dataset has been pre-divided into a training set comprising 60% of the data and a test set comprising 40%.

Easy! Now you have your data. To visualize it in the browser, run:

cd /path/to/downloaded/project
encord-active visualize

The image below shows the webpage that opens in your browser, displaying the data and its properties. Let’s analyze the properties we can visualize here.

Visualize the data in your browser (data = Caltech-101 training data, 60% of the Caltech-101 dataset)

Data Quality of the Caltech 101 Dataset

To assess data quality, navigate to the Data Quality → Summary page. The summary page contains information such as the total number of images and the image size distribution. It also gives an overview of how many issues Encord Active has detected in the dataset and tells you which metric to focus on.

The summary tab of Data Quality

The Data Quality → Explorer page contains detailed information about different metrics and the data distribution for each metric.
A few of the metrics are discussed below:

2D Embeddings

In machine learning, a 2D embedding of an image is a transformation of the high-dimensional image data into a 2D space that preserves its most important features and characteristics. The 2D embedding plot here is a scatter plot in which each point represents an image in the dataset, and the position of each point reflects how similar or dissimilar it is to the others. To inspect a region, use the box or lasso selection tool in the upper right corner of the plot; once you select a region, only the images in that region are shown.

2D embedding plot in Encord Active using the Caltech101 dataset

A 2D embedding plot provides an intuitive way to visualize complex, high-dimensional data. It lets you observe patterns and relationships that are hard to discern in the original high-dimensional space: by projecting the data into two dimensions, you can see clusters of similar data points, outliers, and other patterns useful for data analysis. The plot also supports exploratory data analysis, since you can interactively explore the data and identify interesting subsets. Additionally, a 2D embedding can serve as a pre-processing step for machine learning tasks such as classification or clustering, as it provides a compact representation of the data that can easily be fed into other algorithms.

Area

The area metric is the product of image width and image height. The plot shows a wide range of image areas, which indicates that the images come from diverse sources. The dataset may need pre-processing, such as resizing, to make the images more comparable. Beyond 100,000 pixels, there are only a few images.
These images are very large compared to the rest of the dataset; they are outliers that should be removed or pre-processed before training.

An example of images of people's faces in the dataset, using Encord Active to tag them to assess data quality

Aspect Ratios

The aspect ratio is the ratio of image width to image height. Here, the aspect ratio ranges from 0.27 to 3.88, which indicates that image shapes in the dataset are very diverse.

Aspect ratios

Images with an aspect ratio between 1.34 and 1.98 have the highest density; images far outside this range are potential outliers that need to be processed or removed. Normalizing the aspect ratios, for example by cropping or padding before resizing, gives all images the same shape, which creates a consistent representation of the data that is easier to process. When aspect ratios are not normalized, the model has to adapt to the varying shapes of the images, which can lengthen training; normalizing them lets the model learn from a consistent set of images.

Image Singularity

The image singularity metric gives each image a score that reflects its uniqueness within the dataset. A score of zero indicates that the image is a duplicate of another image in the dataset, while a score close to one indicates that the image is unique, i.e., there are no similar images. This metric is useful for identifying duplicate and near-duplicate images. Near-duplicate images are not exactly the same but show the same object shifted, rotated, or blurred. Overall, the metric helps ensure that each object is represented from different viewpoints.
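Encord Active's exact singularity formula is not described in this post. As a rough, hypothetical proxy, the sketch below scores each image as 1 minus its highest cosine similarity to any other image, assuming you already have one feature vector (embedding) per image from some pretrained model. Exact duplicates score 0 and images with no close neighbour score higher; the helper names, the scoring rule, and the default 0.1 near-duplicate threshold are illustrative assumptions.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def singularity_scores(embeddings):
    """Hypothetical singularity proxy: 1 - max cosine similarity to any other image.

    Needs at least two embeddings. Runs in O(n^2) time, which is fine for a
    dataset the size of Caltech101 but not for millions of images.
    """
    scores = []
    for i, emb in enumerate(embeddings):
        best = max(
            cosine_similarity(emb, other)
            for j, other in enumerate(embeddings)
            if j != i
        )
        # Clamp tiny negative values caused by floating-point rounding.
        scores.append(max(0.0, 1.0 - best))
    return scores

def near_duplicates(scores, threshold=0.1):
    """Indices whose score falls in [0, threshold]: exact and near-duplicates."""
    return [i for i, s in enumerate(scores) if s <= threshold]

# Usage with toy 2D "embeddings": images 0 and 1 are identical, image 2 is not.
scores = singularity_scores([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
print(scores)                   # [0.0, 0.0, 1.0]
print(near_duplicates(scores))  # [0, 1]
```

Comparing every pair of images is the simplest correct approach; for much larger datasets, an approximate nearest-neighbour index would be the usual replacement.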
Image singularity is especially important for small datasets like Caltech101. With fewer images available, each image carries more weight in the information it provides for training, so duplicates can introduce bias; it is important that every image is unique and adds information. Small datasets are also more prone to overfitting, where the model memorizes the training data instead of generalizing to new data. This can happen when the dataset contains many duplicate or highly similar images, because the model may rely on them instead of learning generalizable features. By using the image singularity metric to identify and remove duplicate or highly similar images, we can keep a small dataset diverse and representative of the objects or scenes we want the model to recognize, which helps prevent overfitting and improves generalization to new data.

To find exact duplicates, select the image singularity filter and set the score to 0. The Caltech101 dataset contains 46 exact duplicates.

Image singularity metric showing the exact-duplicate images.

Setting the score range to 0 to 0.1 returns both exact duplicates and near-duplicates. The near-duplicates are also visualized side by side, so they are easier to filter out. The right threshold for filtering near-duplicates depends on the size of the dataset; for visualization, we selected a score range of 0 to 0.1.

Selecting different thresholds to filter out the near duplicates

This range contains 3,281 images, nearly 60% of the training dataset.
It's crucial to have these images reviewed by annotators or machine learning experts to decide whether they should be kept in the dataset.

Blur

Blurring reduces the sharpness or clarity of an image, typically by averaging or smoothing nearby pixel values. It can be intentional, for noise reduction or privacy protection, or unintentional. Blurred images hurt the performance of object recognition models trained on them, because blur removes or obscures important visual features such as edges, texture, and other fine-grained details that distinguish one object from another. Blur is therefore an important data quality metric for object recognition.

Assessing Blur for data distribution

This data distribution shows the blurriness of the images, computed by applying a Laplacian filter to each image and taking the variance of the output. The distribution above shows some outliers in the range from -1400 to -600. Detecting and removing these blurred images improves the overall quality of the training data, which can lead to better model performance and generalization.

Example of blurred images.

Label Quality of the Caltech 101 Dataset

To assess label quality, navigate to the Label Quality → Summary tab for an overview of the dataset's label quality. Here you can find information such as object annotations, classification annotations, and the metrics that flag issues in the labels.

The summary page of label quality.

The Explorer page shows information about the labeled dataset at the metric level, so the dataset can be analyzed one metric at a time.
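Returning briefly to the Blur metric from the data quality section: the score there is the variance of a Laplacian-filtered image. The sketch below reproduces that idea in plain Python on a 2D list of grayscale values, using the common 4-neighbour Laplacian kernel. The kernel choice is an assumption, and Encord Active's exact kernel, scaling, and sign convention are not stated (its plot shows negative values). Here, lower variance means fewer sharp edges, i.e. a blurrier image; the `threshold` in `flag_blurry` is a hypothetical parameter you would tune on your own data.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian response over the image interior.

    `gray` is a 2D list of grayscale intensities with at least 3 rows and
    3 columns. A low value means few sharp edges, i.e. a blurrier image.
    """
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Kernel [[0, 1, 0], [1, -4, 1], [0, 1, 0]] applied at (y, x).
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)

def flag_blurry(images, threshold):
    """Indices of images whose Laplacian variance is below a hypothetical threshold."""
    return [i for i, img in enumerate(images) if laplacian_variance(img) < threshold]

# Usage: a flat image has no edges; a checkerboard is all edges.
flat = [[10] * 5 for _ in range(5)]
checker = [[255 * ((x + y) % 2) for x in range(5)] for y in range(5)]
print(laplacian_variance(flat))             # 0.0
print(flag_blurry([flat, checker], 100.0))  # [0]
```

On real photos you would usually run the same computation with an image library (for example OpenCV's Laplacian filter) for speed. The pure-Python version only makes the arithmetic explicit.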
The metrics flagged in red on the summary page are a good starting point for label quality analysis. Navigate to Label Quality → Explorer to reach the explorer page.

Image-level Annotation Quality

The image-level annotation quality metric compares each image's classification against the classifications of similar images. It is a ratio: a value of 1 indicates that the annotation agrees with similar images, whereas a value between 0 and 1 indicates a discrepancy between the annotation and the classifications of similar images.

Using the data distribution plot to assess image-level annotation quality

The data distribution plot clearly shows outliers whose image-level annotation quality is questionable. Setting the filter to scores between 0 and 0.01 returns 92 images with label errors, which is a significant number. Such errors arise for a variety of reasons, such as human error or inconsistent labeling criteria. Label errors reduce the quality and accuracy of computer vision models trained on the dataset, because incorrect labels cause the model to learn inaccurate patterns and make inaccurate predictions. With a small dataset like Caltech101, it is important to fix them.

This shows the class-level distribution of the label errors. The classes butterfly and yin_yang have the most label errors.

In the above image, we can see a discrepancy between the annotation and how similar objects are tagged in the class butterfly. Having an annotator review these annotations will improve the label quality of the dataset and help build a robust object recognition model.

Object Class Distribution

The class distribution plot below shows that classes such as airplanes, faces, watch, and barrels are over-represented in the Caltech101 dataset, while the classes below the median are under-sampled. The dataset is therefore imbalanced.
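To reproduce the median-based imbalance check described above on your own labels, you can count images per class and compare each count with the median. The sketch below also derives inverse-frequency class weights with the formula n_samples / (n_classes × count), the same heuristic scikit-learn uses for `class_weight="balanced"`. Weighting the loss this way is one common mitigation, offered as an assumption rather than the approach used in this post, and the labels in the usage example are made up.

```python
from collections import Counter
from statistics import median

def class_distribution_report(labels):
    """Count images per class and split classes around the median count."""
    counts = Counter(labels)
    med = median(counts.values())
    under = sorted(c for c, n in counts.items() if n < med)
    over = sorted(c for c, n in counts.items() if n > med)
    return counts, med, under, over

def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count_per_class)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * n) for c, n in counts.items()}

# Usage with made-up labels (not the real Caltech101 counts).
labels = ["airplanes"] * 8 + ["faces"] * 4 + ["snoopy"] * 2 + ["garfield"] * 2
counts, med, under, over = class_distribution_report(labels)
print(med, under, over)  # 3.0 ['garfield', 'snoopy'] ['airplanes', 'faces']
print(balanced_class_weights(labels))
```

Rare classes get weights above 1 and frequent classes get weights below 1, so each class contributes roughly equally to a weighted loss.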
Class imbalance can cause problems in any computer vision model because models tend to be biased towards the majority classes, resulting in poor performance on the minority classes. The model may have high overall accuracy yet miss important instances of the minority classes, leading to false negatives. To learn how to balance your dataset, read the blog 9 Ways to Balance Your Computer Vision Dataset.

Balancing your computer vision dataset

2D Embeddings

The 2D embedding plot in Label Quality shows a data point for each image, colored by the class the object belongs to. It helps surface outliers by revealing unexpected relationships or possible areas of model bias in the object labels. The plot also shows how separable the dataset is. A separable dataset is useful for object recognition because it allows simpler, more efficient computer vision models to achieve high accuracy with relatively few parameters.

2D embedding plot in label quality

Here, the object classes are well-defined and can be distinguished from each other with simple decision boundaries, so the Caltech 101 dataset appears to be largely separable. A separable dataset is a useful starting point for object recognition, as it lets us quickly develop and evaluate simple machine learning models before moving to more complex ones if needed. It also helps us understand the data and the features that distinguish the object classes, which can inform more sophisticated models in the future.

Model Quality Analysis

We trained a benchmark model on the Caltech101 training set and will evaluate its performance on the test set. To do this, we need to import the model's predictions into Encord Active; the Encord Active documentation explains how to import your predictions.
Once the benchmark model is trained, it is natural to want to assess its performance. When you import your predictions into Encord Active, the platform automatically compares your ground truth labels with the predictions and provides useful insights into the model’s performance, including:

Class-specific performance results
Precision-recall curves for each class, as well as class-specific AP/AR results
Identification of which of the 25+ quality metrics have the greatest impact on the model’s performance
Detection of true positive and false positive predictions, as well as false negative ground truth objects

The model quality analysis here is done on the test data, the 40% of the Caltech101 dataset not used in training.

Assessing Model Performance Using Caltech 101

The model achieves 83% accuracy on the test dataset, with a mean precision of 0.75 and a mean recall of 0.71. This indicates that the object recognition model performs fairly well, but there is still room for improvement.

Precision is the proportion of true positives among all predicted positives, while recall is the proportion of true positives among all actual positives. A precision of 0.75 means that 75% of the objects the model predicted as positive were actually correct. A recall of 0.71 means that the model correctly identified 71% of all actual positive objects.

While 83% accuracy may seem good, it’s important to consider precision and recall as well. Depending on the context and task requirements, they may be more important to focus on than overall accuracy.

Assessing Model Performance Using Caltech 101

Performance Metrics

Our initial aim is to examine which quality metrics have an impact on the performance of the model.
If a metric is highly important, changes in that metric considerably influence the model’s performance. Here, image singularity, area, image-level annotation quality, and aspect ratio are the most important metrics, and each affects the model positively or negatively. For example:

Image Singularity

Image singularity has a negative impact on the benchmark model: the more unique an image is, the fewer similar (but not duplicate) images of that object class the model has seen, so the harder it is for the model to learn the corresponding patterns.

Area

Image area has a positive impact on the benchmark model. High area values mean high-resolution images, which give the classification model more information to learn from. On the other hand, if computational resources are limited, high-resolution images can have a negative effect, because they require large amounts of memory and processing power, making them computationally expensive.

Image-level Annotation

This metric is useful for filtering out images that are hard for the model to learn. A low score indicates hard images, whereas a score close to 1 indicates high-quality annotated images that are easy for the model to learn and free of label errors.

Precision-Recall

Precision and recall are two common metrics for evaluating the performance of a computer vision model. Precision measures the proportion of true positives among all predicted positives, while recall measures the proportion of true positives among all actual positives. The two are often used together to evaluate a model.
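These definitions translate directly into code. The sketch below computes accuracy and per-class precision, recall, and F1 from lists of true and predicted labels, then macro-averages them (an unweighted mean over classes). Macro averaging is an assumption about how the mean precision and recall figures above are aggregated, and the function name is illustrative.

```python
def classification_metrics(y_true, y_pred):
    """Return accuracy, macro-averaged (precision, recall, f1), and per-class scores."""
    classes = sorted(set(y_true) | set(y_pred))
    per_class = {}
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0  # correct among predicted c
        recall = tp / (tp + fn) if tp + fn else 0.0     # found among actual c
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class[c] = (precision, recall, f1)
    # Macro average: every class counts equally, regardless of its size.
    macro = tuple(sum(scores[k] for scores in per_class.values()) / len(classes)
                  for k in range(3))
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return accuracy, macro, per_class

# Usage with toy labels.
acc, (macro_p, macro_r, macro_f1), per_class = classification_metrics(
    ["cat", "cat", "dog", "dog"], ["cat", "dog", "dog", "dog"])
print(acc, macro_r)  # 0.75 0.75
```

Because macro averaging weights every class equally, a few poorly handled minority classes can pull the mean precision and recall well below overall accuracy. This is one reason the two kinds of numbers can diverge on an imbalanced dataset.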
In some cases, high precision matters more than recall (e.g., in spam filtering, where flagging a legitimate message as spam means an important message is missed), while in others high recall matters more (e.g., in medical diagnosis, where missing a disease can be more dangerous than a false alarm).

Precision and recall are a trade-off: as one increases, the other may decrease. For example, raising the threshold for positive predictions may increase precision but decrease recall, while lowering it may increase recall but decrease precision. It's important to consider both metrics together and choose a threshold that balances them based on the needs of the problem.

To consider both metrics at once, refer to the average F1 score on the model performance page. The F1 score is the harmonic mean of precision and recall; it is computed for each class in the dataset and averaged across all classes, which gives insight into the model's performance across classes.

The precision-recall plot gives an overview of the precision and recall of every object class in the dataset. Some classes, such as “garfield” and “binocular”, show large gaps between precision and recall, so their thresholds need to be balanced for a high-performing model.

Precision-recall plot

Confusion Matrix

A confusion matrix is a table often used to evaluate a machine learning model on a classification task. It summarizes the predicted and actual labels for a set of test data, letting us visualize how well the model performs.

The confusion matrix of the Caltech101 dataset

The confusion matrix of the Caltech101 dataset shows which object classes are often confused. For example, the image below shows that the classes snoopy and garfield are often confused with each other.
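Building a confusion matrix and ranking the most confused class pairs is straightforward once you have true and predicted labels. In the sketch below, rows are actual classes and columns are predicted classes (a common convention, assumed here). Confusions are counted in both directions, so pairs like snoopy and garfield surface together. The function names and sample labels are hypothetical.

```python
from collections import Counter

def confusion_matrix(y_true, y_pred, classes):
    """Square matrix where rows are actual classes and columns are predicted classes."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix

def most_confused_pairs(y_true, y_pred, top_k=3):
    """Most frequent class pairs among errors, counting both directions together."""
    errors = Counter(tuple(sorted((t, p))) for t, p in zip(y_true, y_pred) if t != p)
    return errors.most_common(top_k)

# Usage with hypothetical predictions.
y_true = ["snoopy", "snoopy", "garfield", "garfield", "butterfly"]
y_pred = ["garfield", "snoopy", "snoopy", "garfield", "butterfly"]
classes = ["butterfly", "garfield", "snoopy"]
for row in confusion_matrix(y_true, y_pred, classes):
    print(row)
print(most_confused_pairs(y_true, y_pred))  # [(('garfield', 'snoopy'), 2)]
```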
Object class Snoopy is confused with Garfield

Performance By Metric

In the Performance By Metric tab, as the name suggests, we can find the true positive rate of the predictions broken down by different metrics. The true positive rate is the proportion of actual positive cases that the model correctly identifies as positive. We want the true positive rate to be high across all the important metrics identified on the model performance page. Earlier, we saw that area, image singularity, and others are among the important metrics. The plot below shows the true positive rate of the predictions with image singularity as the metric.

An example of an average true positive rate

The average true positive rate is 0.8, which is a good result and suggests that the trained baseline model is robust.

Misclassifications

In the Model Quality → Explorer tab, we can find a filter to visualize the objects that were predicted incorrectly. We can also inspect the misclassifications within each class.

Conclusion

In this blog, we explored object classification with a focus on the Caltech 101 dataset. We began by discussing the importance of object classification in computer vision and its various applications. We then introduced the Caltech 101 dataset. We also discussed the importance of data quality in object recognition and evaluated the data quality of the Caltech 101 dataset using the Encord Active tool. We looked at various aspects of data quality, including aspect ratios, image area, blurred images, and image singularity. Furthermore, we evaluated the label quality of the dataset using 2D embeddings and image-level annotation quality. Next, we trained a benchmark object classification model on the Caltech 101 dataset and analyzed its performance in Encord Active. We also evaluated the model’s quality metrics and identified the misclassified objects in each class.

Ready to improve the performance and scale your object classification models?
Sign up for an Encord Free Trial: the Active Learning Platform for Computer Vision, used by the world’s leading computer vision teams. AI-assisted labeling, model training and diagnostics, and finding and fixing dataset errors and biases, all in one collaborative active learning platform, to get to production AI faster. Try Encord for Free Today.

Further Steps

To further improve the performance of object classification models, there are several possible next steps. First, we can explore more sophisticated models that are better suited to the specific characteristics of the Caltech 101 dataset. We can also try incorporating other datasets, or even synthetic data, to improve the model’s performance. Another possible direction is to investigate the impact of hyperparameters such as learning rate, batch size, and regularization on the model’s performance. We can also experiment with different optimizers, such as stochastic gradient descent, Adam, or RMSprop, to see how they affect the model’s performance. Finally, we can use more advanced evaluation techniques, such as cross-validation or ROC curves, to better understand the model’s performance and identify areas for improvement. By pursuing these steps, we can continue to improve the accuracy and robustness of object classification models, which can have a significant impact on fields such as medical imaging, autonomous vehicles, and security systems.
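Of the evaluation techniques mentioned in the further steps, k-fold cross-validation is simple to sketch: the data is split into k folds, and each fold serves once as the validation set while the remaining folds are used for training. The helper below (the name `k_fold_indices` is hypothetical) only produces the index splits; training and evaluating a model on each split is left to whatever framework you use.

```python
import random
from typing import List, Tuple


def k_fold_indices(
    n_samples: int, k: int, seed: int = 0
) -> List[Tuple[List[int], List[int]]]:
    """Split sample indices into k (train, validation) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once, reproducibly, before splitting
    # Spread the remainder so fold sizes differ by at most one sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds = []
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]                 # this fold is held out
        train = indices[:start] + indices[start + size:]  # all other folds are used for training
        folds.append((train, val))
        start += size
    return folds


for fold, (train_idx, val_idx) in enumerate(k_fold_indices(10, k=3)):
    print(f"fold {fold}: {len(train_idx)} train, {len(val_idx)} validation")
```

Because Caltech 101 classes vary considerably in size, a stratified split that preserves class proportions in every fold is usually the better choice in practice; this plain version only shuffles once with a fixed seed so that the folds are reproducible.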

May 05 2023

Grounding-DINO + Segment Anything Model (SAM) vs Mask-RCNN: A comparison