Encord Monthly Wrap: March Industry Newsletter

Written by

Stephen Oladele

Hi there,

Welcome to the Computer Vision Monthly Wrap for March 2024!

Here’s what you should expect:

- 🍏 MM1 - Methods, analysis, and insights from multimodal LLM pre-training by researchers at Apple.

- 📸 HyperLLaVA for developing adaptable and efficient AI systems that can excel across various multimodal tasks.

- 📽️ Understanding Mora, an open-source alternative to OpenAI’s text-to-video model.

- ⚒️ Developer resources to use for your next vision AI application.

- ☁️ Top 15 image segmentation repos for your next segmentation applications.

- 🤖 Google’s Video Gaming Companion: Scalable Instructable Multiworld Agent [SIMA].

Let’s dive in!

Top Picks for Computer Vision Papers This Month

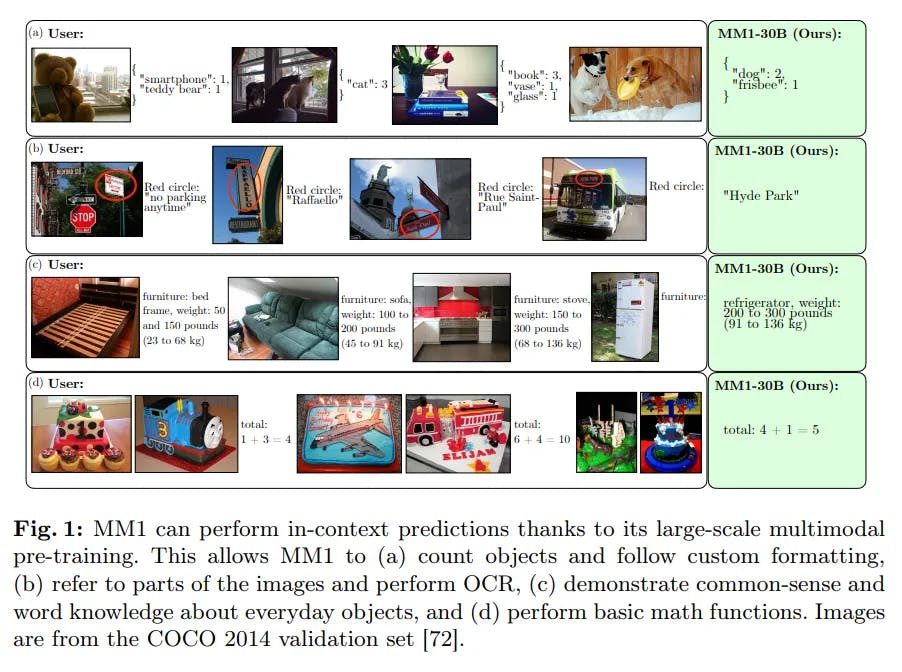

MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training

This paper from Apple researchers is an in-depth analysis of multimodal large language model (MLLM) pre-training. They focused on developing efficient models by exploring architectural components and data selection strategies.

The study shows how combining different kinds of data (text-only data, interleaved image-text documents, and image-caption pairs) can improve few-shot learning performance on a range of benchmarks. It is a useful step toward models that can understand and process complex multimodal inputs.

What’s impressive? 🤯

- The researchers scaled the model in both dense and Mixture of Experts (MoE) variants. MoE variants route each input to a subset of expert sub-networks, so the model can grow in capacity while distributing compute more selectively. This is crucial for ensuring the model can work well in many real-world applications.

- The model's strong few-shot performance across several benchmarks suggests that training on interleaved image-text data helps it learn from only a handful of examples, which could help us build agile and adaptable AI systems.

- The 30B (billion) parameter dense model beats the prior state of the art (SOTA) on visual question answering (VQA) and captioning tasks.
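To make the MoE idea above more concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a generic illustration, not MM1's actual implementation: the `TinyMoE` class, its dimensions, and the random weights are all assumptions made up for this example.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


class TinyMoE:
    """Hypothetical toy MoE layer: a router picks top_k experts per input."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one score per expert for a given input vector.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)]
                       for _ in range(num_experts)]
        # Each expert is a dim x dim linear map (random weights, untrained).
        self.experts = [[[rng.gauss(0, 1) for _ in range(dim)]
                         for _ in range(dim)]
                        for _ in range(num_experts)]

    def forward(self, x):
        logits = matvec(self.router, x)
        # Keep only the top_k highest-scoring experts for this input.
        chosen = sorted(range(len(logits)),
                        key=lambda i: logits[i], reverse=True)[:self.top_k]
        gates = softmax([logits[i] for i in chosen])
        out = [0.0] * len(x)
        for gate, i in zip(gates, chosen):
            y = matvec(self.experts[i], x)  # only chosen experts run
            out = [o + gate * yi for o, yi in zip(out, y)]
        return out, chosen


moe = TinyMoE(dim=4, num_experts=8, top_k=2)
output, active_experts = moe.forward([1.0, 0.5, -0.3, 2.0])
print("active experts:", active_experts)
```

Only 2 of the 8 experts do any work for a given input here, which is the sense in which MoE models spend compute selectively.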

How can you apply it? ⚒️

- If you are conducting multimodal AI research, consider applying insights from MM1's architectural decisions, training recipes, and data strategies to improve how you develop new AI models.

- You can use the model for creative tasks like generating and curating context-aware content across different media. This will make it easier for people to create interesting and useful content.

- If you are building recommendation engines, use it to analyze user preferences across different media types for more personalized content suggestions.

📜 Read the paper on arXiv. If that’s a lot, we also put out an explainer that helps you quickly get to the important bits.

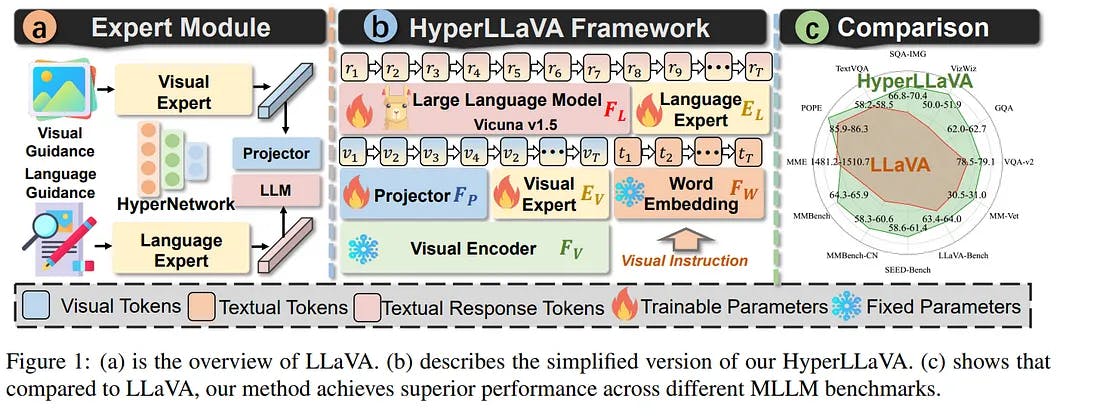

HyperLLaVA: Dynamic Visual and Language Expert Tuning for Multimodal Large Language Models

Advancements in Multimodal Large Language Models (MLLMs) have shown that scaling them up improves their performance on downstream multimodal tasks. But the current static tuning strategy may constrain their performance across different tasks.

This paper discusses HyperLLaVA, a framework that circumvents the problems with static tuning methods by letting visual and language experts dynamically tune both the projector (which turns visual data into a format that language models can understand) and the LLM parameters.

What’s impressive? 👀

- It uses a unique training methodology that first aligns visual-language features and then refines language model tuning with multimodal instructions, optimizing the model’s comprehension and responsiveness.

- It performs strongly on MLLM benchmarks (MME, MMBench, SEED-Bench, and LLaVA-Bench), which opens the door for AI systems that are more nuanced, adaptable, and capable of handling complex multimodal data.

- Unlike static models, HyperLLaVA uses HyperNetworks to adaptively generate parameters for projectors and LLMs based on input, which helps with task-specific optimizations.
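The hypernetwork idea in the last point can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not HyperLLaVA's architecture: the `HyperProjector` class, its sizes, and its random weights are hypothetical. It only shows the core mechanism, where a small network generates the projector's weights from the input instead of using one fixed weight matrix.

```python
import random


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


class HyperProjector:
    """Hypothetical toy projector whose weights depend on the input."""

    def __init__(self, in_dim, out_dim, seed=0):
        rng = random.Random(seed)
        self.in_dim = in_dim
        self.out_dim = out_dim
        # The hypernetwork: a linear map from the input feature to a flat
        # vector holding all out_dim * in_dim projector weights.
        self.hyper = [[rng.gauss(0, 0.1) for _ in range(in_dim)]
                      for _ in range(out_dim * in_dim)]

    def generate_weights(self, x):
        """Produce an input-specific out_dim x in_dim weight matrix."""
        flat = matvec(self.hyper, x)
        return [flat[r * self.in_dim:(r + 1) * self.in_dim]
                for r in range(self.out_dim)]

    def forward(self, x):
        W = self.generate_weights(x)  # weights are generated per input
        return matvec(W, x)           # then applied like a normal projector


projector = HyperProjector(in_dim=3, out_dim=2)
print(projector.forward([1.0, 2.0, 3.0]))
```

A static projector would reuse the same matrix for every input. Here two different inputs get two different matrices, which is what makes task-specific adaptation possible.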

📜 Read the paper on arXiv.

Google’s Video Gaming Companion: Scalable Instructable Multiworld Agent [SIMA]

How do you train an AI agent to be a generalist? Google DeepMind’s latest AI agent, SIMA, short for Scalable Instructable Multiworld Agent, helps us understand precisely how.

SIMA interacts with the environment in real-time using a generic human-like interface. It receives image observations and language instructions as inputs and generates keyboard and mouse actions as outputs. SIMA is trained on a dataset of video games, including Satisfactory, No Man's Sky, Goat Simulator 3, and Valheim.
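As a rough picture of that interface, here is a minimal sketch of the input and output types in Python. Everything in it is hypothetical: the `Observation` and `Action` classes and the keyword-based `placeholder_policy` are stand-ins invented for illustration, and the real agent uses a learned model rather than hand-written rules.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Observation:
    """What the agent sees: a screen frame plus a language instruction."""
    frame: List[List[int]]  # toy grayscale image as rows of pixel values
    instruction: str


@dataclass
class Action:
    """What the agent emits: keyboard and mouse events."""
    keys: List[str] = field(default_factory=list)
    mouse_move: Tuple[int, int] = (0, 0)
    mouse_click: bool = False


def placeholder_policy(obs: Observation) -> Action:
    """Keyword rules standing in for a learned policy (assumption)."""
    text = obs.instruction.lower()
    if "forward" in text:
        return Action(keys=["w"])
    if "open" in text or "collect" in text:
        return Action(mouse_click=True)
    return Action()  # no-op when the instruction is not recognized


obs = Observation(frame=[[0, 0], [0, 0]], instruction="Move forward")
print(placeholder_policy(obs))
```

Because the inputs are just pixels and text and the outputs are just keyboard and mouse events, the same interface can be reused across different games without game-specific APIs.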

Here is an explainer post that distills the technical paper with the most important bits you need to know.

MORA: The Advanced Multi-Agent Video Generation Framework

Mora is a multi-agent framework designed for generalist video generation. Inspired by OpenAI's Sora, it aims to replicate and expand the range of generalist video generation tasks. It distinguishes itself from Sora by integrating several visual AI agents into a cohesive system. Here are the video generation tasks it can do:

1️⃣ Text ➡️ Video

2️⃣ Text + Image ➡️ Video

3️⃣ Extending Videos 📈

4️⃣ Text + Video ➡️ Video

5️⃣ Video merging 🤝

6️⃣ Simulating digital worlds 🤖

Here is an explainer post that distills the technical paper with the most important bits you need to know.

Developer Resources You’d Find Useful

- Gemini 1.5 Pro API Support in AI Studio for Developers → Google started rolling out Gemini 1.5 Pro support for developers! You can now start developing AI apps with Gemini 1.5 Pro, which comes with a standard 128,000-token context window, and you can also build with the 1M-token context window!

- 15 Interesting GitHub Repositories for Image Segmentation → If you are building an application involving image segmentation, this article includes 15 GitHub repositories that showcase different approaches to segmenting complex images.

- The Generative AI In-Vehicle Experience Powered by NVIDIA DRIVE → In a recent video, NVIDIA unveiled a new in-vehicle AI experience powered by NVIDIA DRIVE. This multimodal AI assistant can perceive, reason with, and assist drivers with features like surround visualization, access to a knowledge base, and the ability to read and understand text. This new experience will likely help with developing more context-aware autonomous vehicle systems.

Here are other quick finds if you 💓 Encord and computer vision data stuff ⚡:

- Join the Encord Community to discuss this newsletter.

- Data-centric computer vision blog.

Till next month, have a super-sparkly time!
